What Engineers Actually Struggle With in Kafka: QCon London 2025

What software engineers, architects, and platform teams told us about the real challenges of scaling Kafka, straight from the floor at QCon London 2025.

QCon London brings together senior software engineers, architects, and tech leaders to discuss architecture, scale, and emerging patterns. At the 2025 edition, tracks like Architectures You've Always Wondered About, Modern Data Architectures, and AI and ML for Software Engineers all converged on the same themes: moving data in real time, keeping it secure, and making it usable across teams. Data streaming is no longer niche. It's becoming the backbone of modern systems.

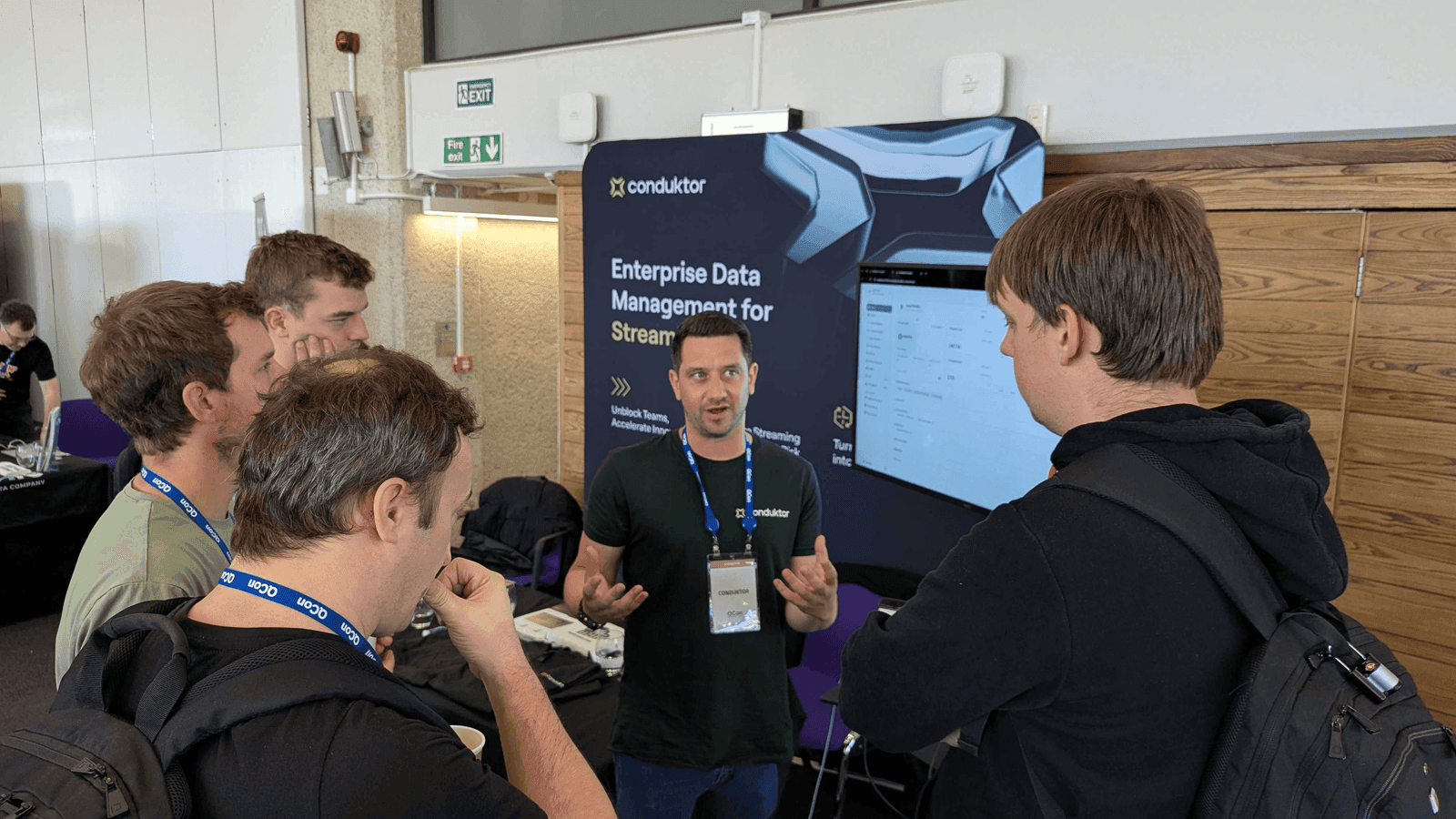

We sponsored QCon to talk directly to people living these streaming challenges. Software architects, platform teams, and developers stopped by our booth with real problems: access bottlenecks, brittle custom platforms, governance gaps, security concerns, and quality issues killing AI initiatives. These aren't edge cases. They're the daily reality for teams trying to scale data streaming responsibly.

Teams Need Solutions That Match Their Kafka Maturity

Every team we talked to sits somewhere different on the Kafka maturity curve. Some are just starting. Others manage hundreds of topics and applications. The pattern is clear: solutions need to adapt to the team's current stage, not force them into lengthy, complicated deployments.

Many teams are just starting but grow fast. Platforms need to keep up.

Debugging Kafka Remains Painful

Even seasoned Kafka teams struggle to debug and operate cleanly. Between consumer lag, messages piling up in dead-letter topics, and misconfigured ACLs, teams lose time hunting across logs and disconnected tools. Kafka is fast. Understanding what's happening in real time? Still too slow.

You can't fix what you can't see. Teams waste hours or days chasing symptoms instead of fixing root causes. That kills trust, and it's expensive.
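To make the consumer lag symptom concrete: lag for a partition is the gap between the log end offset and the offset the consumer group last committed. The sketch below is a minimal illustration, not a monitoring tool. It assumes you have already fetched both offset maps from your cluster; the function names, the `orders` topic, and the threshold are hypothetical.

```python
from typing import Dict, List, Tuple

# (topic, partition number); a hypothetical key shape for this sketch
Partition = Tuple[str, int]


def consumer_lag(
    end_offsets: Dict[Partition, int],
    committed_offsets: Dict[Partition, int],
) -> Dict[Partition, int]:
    """Return per-partition lag: log end offset minus committed offset."""
    lag = {}
    for tp, end in end_offsets.items():
        # Assumption: a partition with no committed offset counts as
        # unread from offset 0. Real clusters may have a later log start.
        committed = committed_offsets.get(tp, 0)
        lag[tp] = max(end - committed, 0)
    return lag


def lagging_partitions(
    lag: Dict[Partition, int], threshold: int
) -> List[Tuple[Partition, int]]:
    """Return partitions whose lag exceeds the threshold, worst first."""
    over = [(tp, value) for tp, value in lag.items() if value > threshold]
    return sorted(over, key=lambda item: item[1], reverse=True)


# Illustrative offsets only; not taken from any real cluster.
end = {("orders", 0): 1500, ("orders", 1): 900, ("orders", 2): 300}
committed = {("orders", 0): 1480, ("orders", 1): 400}

lag = consumer_lag(end, committed)
worst = lagging_partitions(lag, threshold=100)
```

Ranking partitions by lag is one way to start from the root cause (a stuck or slow consumer on a specific partition) rather than from downstream symptoms.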

Self-Service Access Is Non-Negotiable

The loudest message from platform teams: engineers need self-service, and they need it fast. One organization told us it takes three weeks just to get access to a topic.

When platform teams become the bottleneck instead of the enabler, everyone pays. Developers get frustrated. Platform teams burn out. Time-to-market suffers. Everyone wants autonomy. No one wants chaos.

The longer self-service gets postponed, the more innovation gets throttled.

Homegrown Kafka Platforms Break Down at Scale

Many teams have tried building their own platform layer on top of Kafka. It starts simple, maybe a few scripts or access controls, but quickly becomes a Frankenstein system: brittle, inconsistent, and always back on the platform team's plate.

Platform teams have done incredible work building internal tooling, but maintaining it long-term takes time and resources. At scale, proven solutions free up platform teams to focus on what actually moves the needle.

Cross-Team Data Sharing Creates Governance Headaches

Teams want to share data across business units or with external partners. That means spinning up new clusters, replicating topics, and hoping nothing breaks. It's slow, messy, and creates new risks with every copy.

Everyone agrees Kafka should be shareable. The catch: no one wants to give up control over security or quality to make it happen.

External data sharing should enable growth, not create compliance headaches. If Kafka can't be governed, it can't scale outside the team that owns it.

Security Concerns Block Data Scientists From Live Streams

Data scientists are stuck waiting or working with stale data because exposing live streams is seen as too risky. Teams know the value of real-time data but can't deliver it safely. The cost: inaccurate AI outputs, lost trust, and missed opportunities.

When security blocks access, innovation stalls. Data science needs real-time, trusted data to build anything that matters.

Poor Data Quality Undermines AI Initiatives

AI is only as good as the data feeding it, and too often the source data isn't reliable enough. Teams see quality issues upstream rippling through downstream pipelines. Fields are missing. Formats are inconsistent. Context is gone.

Fixing it downstream costs more. Teams need control at the source.

Poor data quality isn't just a tech problem. It kills AI accuracy, slows adoption, and undermines machine learning's entire promise.
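As an illustration of what control at the source can mean, a producer can check each record against an agreed contract before it is ever written to a topic. This is a rough sketch under stated assumptions: the `ORDER_CONTRACT` fields, the `validate_record` helper, and the sample records are all hypothetical, and a real deployment would more likely rely on a schema registry or a policy layer than on hand-written checks.

```python
from typing import Any, Dict, List

# Hypothetical contract for an "orders" event: field name -> expected type
ORDER_CONTRACT: Dict[str, type] = {
    "order_id": str,
    "amount": float,
    "currency": str,
}


def validate_record(record: Dict[str, Any], contract: Dict[str, type]) -> List[str]:
    """Return a list of contract violations; an empty list means the record passes."""
    errors = []
    for field, expected_type in contract.items():
        value = record.get(field)
        if value is None:
            # Covers both absent fields and explicit nulls
            errors.append(f"missing field: {field}")
        elif not isinstance(value, expected_type):
            errors.append(
                f"wrong type for {field}: expected {expected_type.__name__}, "
                f"got {type(value).__name__}"
            )
    return errors


# Illustrative records only
good = {"order_id": "A1", "amount": 12.5, "currency": "EUR"}
bad = {"order_id": "A2", "amount": "12.5"}

good_errors = validate_record(good, ORDER_CONTRACT)
bad_errors = validate_record(bad, ORDER_CONTRACT)
```

Rejecting or quarantining the second record before it is produced keeps the missing field and the inconsistent format from reaching every downstream pipeline.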

Where Conduktor Fits In

The conversations at QCon made one thing clear: data streaming is growing fast, and so are the challenges around control, access, and scale. Teams need better ways to manage Kafka and increase adoption without slowing down development or compromising security.

That's our focus. At the booth, we ran dozens of demos showing how Conduktor makes it easier to troubleshoot, govern, and grow Kafka, regardless of where you are in the journey.