Field-Level Encryption in Kafka: Why DIY Libraries Become Tech Debt

DIY Kafka encryption libraries start simple, then become multi-language, multi-KMS nightmares. Proxy-layer encryption is the better path.

Kafka Adoption Starts Small, Then Gets Serious

Kafka adoption follows a predictable pattern. A team tackles a simple use case: log management or telemetry. Confidence grows. Proficiency deepens. The team moves onto business applications.

Then security becomes mandatory:

- Data privacy

- GDPR compliance

- Data leakage prevention

- Cyber-threat protection

Time to encrypt.

Kafka Secures Transit, Not Fields at Rest

Kafka handles network communications with TLS and mTLS. Data in transit is locked down.

Data at rest is another story. Kafka has no native field-level encryption. Your PII and GDPR-regulated fields sit exposed.

Field-level encryption also enables crypto shredding: encrypt data, then delete the key when you need to purge it. No data recovery possible. Crypto shredding satisfies data deletion requirements without touching the original records.

Before field-level encryption, engineers duplicated data into new topics, building Spring Boot or Kafka Streams applications just to strip fields that another team shouldn't access. That approach is obsolete. With encryption, the original topic becomes the single source of truth. Each consumer gets customized visibility:

- View data with encrypted fields

- See partially decrypted data

- Access fully decrypted data

This gap in Kafka's security model cannot be ignored. Field-level encryption is a requirement for protecting sensitive data from unauthorized access.

The DIY Library Trap: From POC to Enterprise Nightmare

Your CSO defines fields to encrypt. You're a capable engineer. Time to write a library in Java.

Version 1.0 ships quickly. Documentation takes time. Integration tests are painful. But it works.

Schema Registry Support

Teams request Schema Registry integration. You add support for:

- Avro

- JSON Schema

- Protocol Buffer

Version 1.1 maintains backward compatibility. Teams don't need to migrate immediately.

Users report a bug: nested field encryption doesn't work. Version 1.2 fixes it.

Your Security team notices the library's adoption. They want it integrated with the enterprise KMS for audit trails. They provide access credentials. You add KMS support.

Python Enters the Game

A team requests Python support. Your library is Java-only. You don't know Python. You recruit a Python-familiar team and port the library.

Version 1.3 ships in both Java and Python.

Testing reveals a problem: data encrypted with Python can't be decrypted by Java. You add cross-language end-to-end tests.

Another request: key rotation management. This requires storing keys in record headers. Both libraries need updates. You create a compatibility matrix for users.

The time investment exceeds expectations. But you're delivering value. You build a comprehensive test harness.

The Compatibility Matrix Explodes

Your responsiveness drives adoption. More teams onboard. They bring their preferences:

- Languages: Go, C#, Rust, Node.js

- KMS providers: Vault, Azure Key Vault, GCP KMS, AWS KMS, Thales

- Custom encryption requirements

The compatibility matrix becomes a beast. Tickets still arrive for version 1.0 bugs.

Your efficient library has become enterprise infrastructure with substantial maintenance costs. You've created technical debt. There's no easy exit.

Success for Everyone Except You

The workload is unsustainable. New tasks pile up. The pressure is relentless. Data volumes through your library keep growing.

Sound familiar? We should talk.

The library is a technical success. The real failure is governance.

The Missing Governance Layer

Team autonomy for encryption implementation creates two overlooked problems:

- KMS access management: secrets, tokens, credentials

- Encryption definition ownership: where is the truth, who controls it

Every team needs KMS access (custom credentials, Service Accounts). Deployments become complex. Integration tests become harder.

Who knows all the rules? Where are encryption definitions stored? Who owns them? Are they consistent across the organization? What happens when your CSO adds or removes a rule?

End-to-end encryption is a coordination problem, not just a technical one.

Gateway Approach: Encryption Without Library Overhead

Conduktor Gateway handles encryption at the proxy layer. No application changes required.

Key characteristics:

- Language agnostic (works with

kafka-console-consumer.sh) - Centralized KMS access, only Gateway needs credentials

- No secret management burden on application teams

- No cross-language interoperability issues

- No deployment or integration testing complexity

Developers and applications change nothing. Security configuration happens in one place.

Role Separation That Works

Each team focuses on their domain:

- Developers work with data. No library dependencies, no versioning conflicts, full language compatibility.

- Security Engineers define and apply encryption rules. All configuration in one location.

- Ops Engineers bridge the gap. They make the machinery invisible to everyone else.

Encryption Configuration Example

Security team defines encryption for password and visa fields in topic customers.

Define encryption rules for produce requests:

docker compose exec kafka-client curl \

--silent \

--request POST "gateway:8888/tenant/tom/feature/encryption" \

--user "superUser:superUser" \

--header 'Content-Type: application/json' \

--data-raw '{

"config": {

"topic": "customers",

"fields": [

{

"fieldName": "password",

"keySecretId": "secret-key-password",

"algorithm": {

"type": "TINK/AES_GCM",

"kms": "TINK/KMS_INMEM"

}

},

{

"fieldName": "visa",

"keySecretId": "secret-key-visaNumber",

"algorithm": {

"type": "TINK/AES_GCM",

"kms": "TINK/KMS_INMEM"

}

}

]

},

"direction": "REQUEST",

"apiKeys": "PRODUCE"

}'

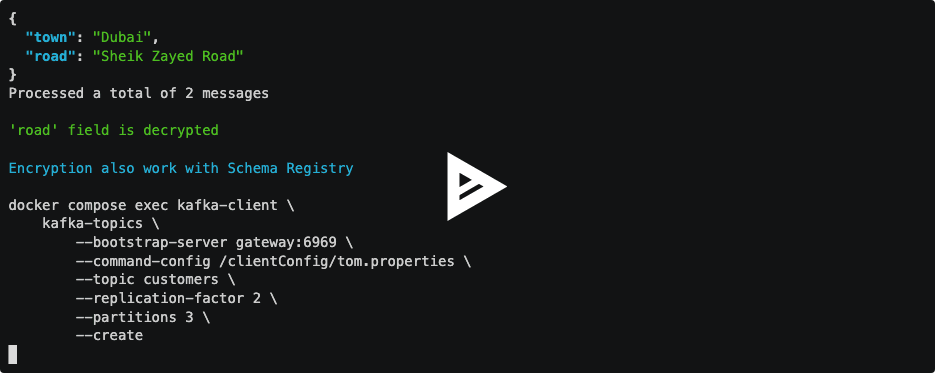

# "SUCCESS"Developers create the topic and produce data with standard tools. No custom serializers, no Java agents, no libraries, no interceptors:

docker compose exec kafka-client \

kafka-topics \

--bootstrap-server gateway:6969 \

--command-config /clientConfig/tom.properties \

--topic customers \

--replication-factor 2 \

--partitions 3 \

--create

# Created topic customers.Produce JSON data:

echo '{

"name": "tom",

"username": "tom@conduktor.io",

"password": "motorhead",

"visa": "#abc123",

"address": "Chancery lane, London"

}' | jq -c | docker compose exec -T schema-registry \

kafka-json-schema-console-producer \

--bootstrap-server gateway:6969 \

--producer.config /clientConfig/tom.properties \

--topic customers \

--property value.schema='{

"title": "Customer",

"type": "object",

"properties": {

"name": { "type": "string" },

"username": { "type": "string" },

"password": { "type": "string" },

"visa": { "type": "string" },

"address": { "type": "string" }

}

}'Consume and verify encryption:

docker compose exec schema-registry \

kafka-json-schema-console-consumer \

--bootstrap-server gateway:6969 \

--consumer.config /clientConfig/tom.properties \

--topic customers \

--from-beginning \

--max-messages 1 | jq .Output shows encrypted fields:

{

"name": "tom",

"username": "tom@conduktor.io",

"password": "AQEzYlKrLZrtxU9jqCJPLggBbx6T+quj2NVsMcJ4zVhcvi77ZaT3wnYleSBYuuqJxQ==",

"visa": "AURBygF0lxL3x1Tmq0Nv7gSbX4cyEIqytG+5+7BawKllrQm/T9GS38Ty/E1Jh3M=",

"address": "Chancery lane, London"

}Fields password and visa are encrypted.

Define decryption rules for fetch responses:

docker compose exec kafka-client curl \

--silent \

--request POST "gateway:8888/tenant/tom/feature/decryption" \

--user "superUser:superUser" \

--header 'Content-Type: application/json' \

--data-raw '{

"config": {

"topic": "customers",

"fields": [

{

"fieldName": "password",

"keySecretId": "secret-key-password",

"algorithm": {

"type": "TINK/AES_GCM",

"kms": "TINK/KMS_INMEM"

}

},

{

"fieldName": "visa",

"keySecretId": "secret-key-visaNumber",

"algorithm": {

"type": "TINK/AES_GCM",

"kms": "TINK/KMS_INMEM"

}

}

]

},

"direction": "RESPONSE",

"apiKeys": "FETCH"

}'

# "SUCCESS"Consume again with decryption enabled:

docker compose exec schema-registry \

kafka-json-schema-console-consumer \

--bootstrap-server gateway:6969 \

--consumer.config /clientConfig/tom.properties \

--topic customers \

--from-beginning \

--max-messages 1 | jq .Output shows decrypted fields:

{

"name": "tom",

"username": "tom@conduktor.io",

"password": "motorhead",

"visa": "#abc123",

"address": "Chancery lane, London"

}Data decrypted. No application changes required. Data encrypted at rest.

Stop Building, Start Encrypting

Field-level encryption is a requirement, not a feature. The question is how you implement it.

DIY libraries trade one problem for another: you solve encryption but create a maintenance burden that grows with every language, KMS, and schema format your organization adopts. Governance becomes impossible when every team manages their own keys and encryption logic.

Proxy-layer encryption centralizes control without touching application code. Security teams define rules once. Developers keep shipping. Ops stays sane.

If your Kafka clusters handle sensitive data and you're weighing your encryption options, let's talk.