Stop Building Kafka Encryption Libraries

Client-side encryption creates ungovernable sprawl. Proxy-layer encryption centralizes control and makes Kafka a dumb pipe.

Your Kafka cluster probably started small—logs, metrics, internal events. Then it worked, so teams trusted it with more: customer records, transactions, healthcare data.

Now you have a problem.

Kafka encrypts data in transit with TLS. But data at rest? It sits in topics as plaintext. Every message on disk is readable by anyone with broker access—operators, backup systems, compromised infrastructure.

The natural instinct: build an encryption library. I've seen this pattern dozens of times. It always ends the same way.

Conduktor simplifies things; and we need this simplicity in our landscape. It helps speed up our daily operations, and helps us with credit card data (PCI DSS) by encrypting the topics. Conduktor, in one sentence, for me, is Kafka made simpler.

Marcos Rodriguez, Domain Architect at Lufthansa

Why Disk Encryption Doesn't Protect Your Kafka Data

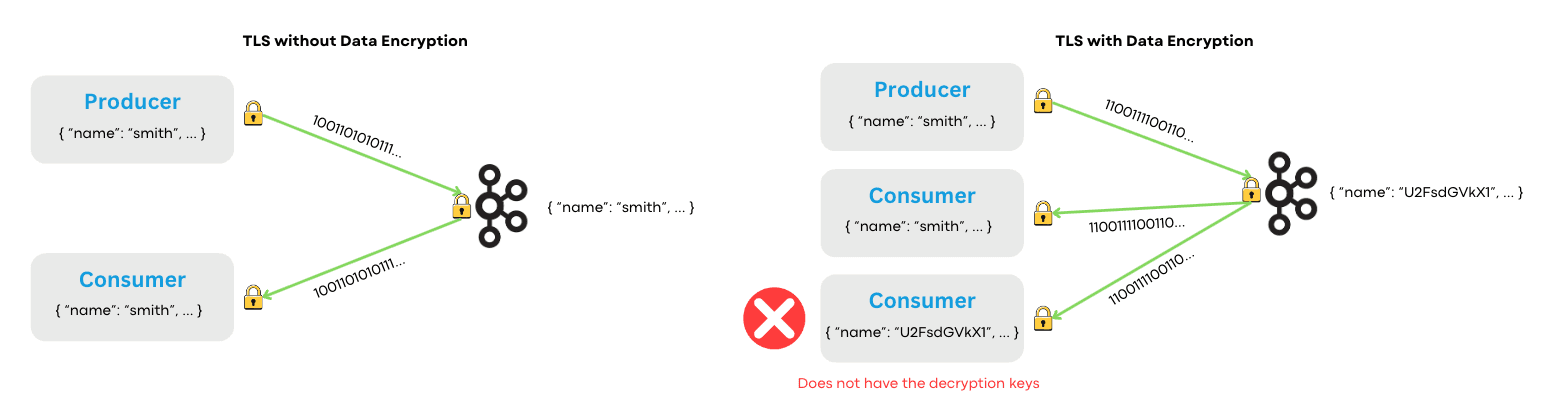

Kafka natively supports encryption for data in transit. TLS or mTLS secures data as it moves between producers, brokers, and consumers. Authentication and authorization work through SASL mechanisms and ACLs.

Kafka does not protect data at rest.

Yes, managed providers offer disk encryption. Amazon MSK encrypts storage with AWS KMS keys. Confluent Cloud encrypts storage too. But this protects against physical disk theft and not much else. By default the provider manages those keys, and its systems decrypt data transparently whenever it is read. Any process with filesystem access on a broker can still read your messages.

Even with disk encryption, you have no field-level control, no audit trail on who accessed what, and no way to delete a single customer's data without deleting the entire topic.

For businesses handling PII, PHI, or financial data, this gap can put you out of compliance with GDPR, CCPA, and HIPAA.

Why Client-Side Encryption Libraries Become Unmaintainable

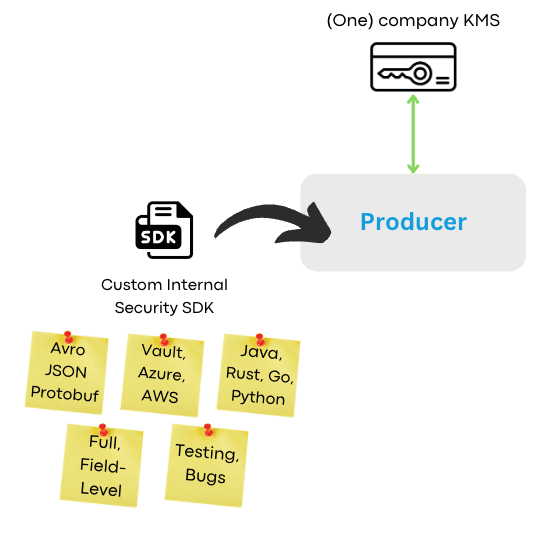

Many teams attempt to build their own encryption on the application side. They create internal libraries that encrypt data—full payload or field-level—before sending to Kafka. At first, this works: a basic library handles encryption, integrates with the Key Management System (KMS), and sends encrypted data to Kafka.

Then reality sets in. As more teams adopt the library, feature requests pile up:

- Support for different serialization formats (Avro, JSON Schema, Protobuf)

- Integration with multiple KMS providers (Vault, Azure, AWS)

- Compatibility across programming languages (Java, Go, Python, Rust)

Maintaining this custom library becomes a full-time job:

- Version fragmentation: Java 8 vs Java 21, Python 3.8 vs 3.12

- Framework support: Spark, Flink, legacy applications, CDC pipelines

- Testing burden: All permutations of languages, versions, and features

- Upgrade lag: Applications not updating to patched versions

- Bug surface: More code means more bugs

But the real problem isn't maintenance. It's governance.

With client-side encryption, every application holds encryption keys. Every team implements (or misimplements) security logic. Key rotation requires coordinating dozens of deployments. Auditing means inspecting every application individually. One misconfigured service can leak plaintext to a topic that should be encrypted.

Client-side encryption is a governance nightmare. You trade one security problem (unencrypted data) for another (uncontrolled key sprawl and inconsistent enforcement).

Proxy-Layer Encryption: Centralized Control

I believe proxy-layer encryption is the only approach that scales.

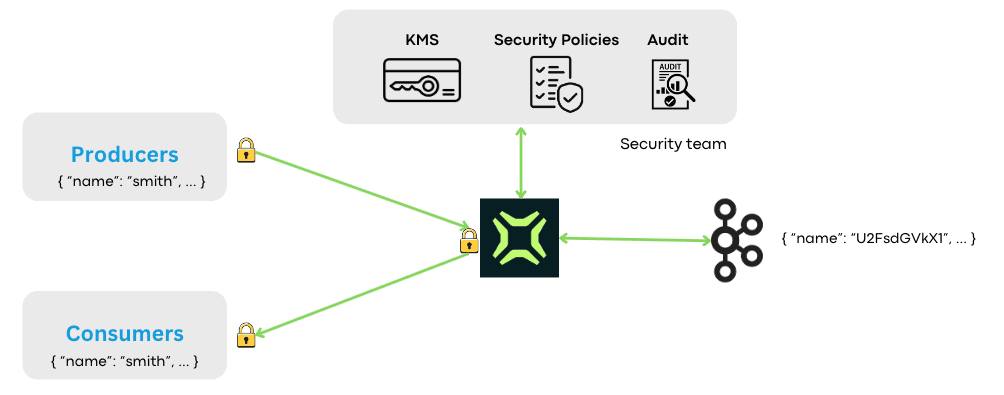

Conduktor Gateway sits between your Kafka clients and brokers. Clients connect to Gateway as if it were Kafka. Gateway connects to the actual Kafka cluster. Encryption happens at this layer—no producer or consumer code changes.

    Producer → Gateway (encrypt) → Kafka Broker (ciphertext only)
                                        ↓
    Consumer ← Gateway (decrypt) ← Kafka Broker (ciphertext only)

The Kafka broker never sees plaintext. It stores ciphertext, replicates ciphertext, and serves ciphertext.

This architecture shift changes the security model fundamentally:

- Zero code changes: Existing producers and consumers work unchanged

- Centralized key management: Keys live in your KMS (Vault, AWS KMS, Azure Key Vault). No keys in application configs

- Instant policy updates: Change encryption rules once; all traffic inherits the update

- Consistent enforcement: Security teams define policy, developers ship features

- No reliance on developers implementing security correctly
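To illustrate the "zero code changes" point, here is a minimal Python sketch of a client configuration before and after routing through the proxy. The hostnames, port, and `client.id` are hypothetical, and the sketch assumes the Gateway listener accepts the same security settings the application already uses; in a real deployment the credentials may differ as well.

```python
# Client settings for an application talking to Kafka directly.
# Hostnames are hypothetical; the keys are common Kafka client property names.
direct_config = {
    "bootstrap.servers": "kafka-broker-1.internal:9092",
    "client.id": "orders-service",
    "acks": "all",
}

# Same application routed through the proxy: only the address is overridden.
via_gateway_config = {**direct_config, "bootstrap.servers": "gateway.internal:9092"}

# Collect every setting whose value differs between the two configurations.
changed = {
    key for key in direct_config
    if direct_config[key] != via_gateway_config[key]
}
print(changed)  # {'bootstrap.servers'}
```

The application code that builds producers and consumers from this configuration stays the same; only the address it is handed changes.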

For details on the architecture, see how Conduktor works and our encryption tutorial.

How Gateway Manages Keys, Policies, and Auditing

Data encryption isn't just about securing information. It's about managing encryption policies and keys in a consistent, auditable, maintainable way. A decentralized model—where each team handles encryption independently—leads to inconsistency and lost credentials.

KMS Integration

Gateway integrates with HashiCorp Vault (Enterprise and Community), AWS KMS (including customer-managed CMKs), Azure Key Vault, and GCP Cloud KMS. HSM-backed keys are supported. Gateway uses envelope encryption: data is encrypted with a Data Encryption Key (DEK), and each DEK is in turn encrypted with a Key Encryption Key (KEK). The KEK is stored in your KMS and never leaves it.

Real-Time Policy Adjustments

Centralized encryption means policies update once and apply instantly. No redeployment of applications. No coordinating releases across teams.

Crypto Shredding

Gateway enables compliance-friendly data deletion by discarding encryption keys. When GDPR or CCPA demands data deletion, delete the key and all associated data becomes permanently unreadable—without touching Kafka's immutable log.
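A minimal Python sketch of how envelope encryption makes crypto shredding possible. Everything named here is an assumption for illustration: `FakeKms`, `encrypt_record`, and `decrypt_record` are hypothetical and are not Gateway or KMS APIs, one KEK per customer is an assumed policy, and `toy_cipher` is an unauthenticated XOR keystream that stands in for a real AEAD such as AES-GCM. Do not use it on real data.

```python
import hashlib
import secrets


def toy_cipher(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """XOR data with a SHA-256 counter keystream.

    Toy stand-in for AES-GCM: NOT secure and not authenticated.
    Applying it twice with the same key and nonce returns the input.
    """
    stream = bytearray()
    counter = 0
    while len(stream) < len(data):
        stream += hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(a ^ b for a, b in zip(data, stream))


class FakeKms:
    """Hypothetical in-memory KMS: KEKs stay inside, callers only wrap and unwrap DEKs."""

    def __init__(self):
        self._keks = {}

    def create_key(self, key_id: str) -> None:
        self._keks[key_id] = secrets.token_bytes(32)

    def wrap(self, key_id: str, dek: bytes) -> bytes:
        nonce = secrets.token_bytes(12)
        return nonce + toy_cipher(self._keks[key_id], nonce, dek)

    def unwrap(self, key_id: str, wrapped: bytes) -> bytes:
        nonce, body = wrapped[:12], wrapped[12:]
        return toy_cipher(self._keks[key_id], nonce, body)

    def destroy_key(self, key_id: str) -> None:
        del self._keks[key_id]


def encrypt_record(kms: FakeKms, key_id: str, plaintext: bytes) -> dict:
    """Encrypt with a fresh DEK, then store only the KEK-wrapped DEK next to the data."""
    dek = secrets.token_bytes(32)
    nonce = secrets.token_bytes(12)
    return {
        "key_id": key_id,
        "wrapped_dek": kms.wrap(key_id, dek),
        "nonce": nonce,
        "ciphertext": toy_cipher(dek, nonce, plaintext),
    }


def decrypt_record(kms: FakeKms, record: dict) -> bytes:
    """Unwrap the DEK through the KMS, then decrypt the payload."""
    dek = kms.unwrap(record["key_id"], record["wrapped_dek"])
    return toy_cipher(dek, record["nonce"], record["ciphertext"])


kms = FakeKms()
kms.create_key("customer-42")
stored = encrypt_record(kms, "customer-42", b'{"ssn": "123-45-6789"}')
print(decrypt_record(kms, stored))  # readable while the KEK exists

kms.destroy_key("customer-42")  # crypto shredding: the stored record is untouched
try:
    decrypt_record(kms, stored)
except KeyError:
    print("record is unrecoverable: KEK destroyed")
```

The stored record never changes. Once the KEK is gone the wrapped DEK cannot be opened, which is why the data can be "deleted" without rewriting the log.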

Unified Auditing

Tracking which data was accessed, when, and by whom is unified under a single framework. Security teams gain visibility across the entire Kafka ecosystem without manually inspecting each application.

Encrypt Specific Fields While Keeping Others Readable

Not all data needs equal protection. A customer_event message might contain:

    {
      "name": "Jane Doe",
      "email": "jane@example.com",
      "ssn": "123-45-6789",
      "order_total": 149.99
    }

Gateway can encrypt only ssn and email while leaving order_total readable. Analytics pipelines process totals; PII stays protected.

Configuration is straightforward:

    recordValue:
      fields:
        - fieldName: "ssn"
          keySecretId: "pii-key"
          algorithm: AES256_GCM
        - fieldName: "email"
          keySecretId: "pii-key"
          algorithm: AES256_GCM

Field paths support dot notation for nested objects (user.address.street) and bracket notation for arrays (transactions[0].amount).
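The path syntax above can be resolved with a small parser. The following Python sketch is one possible reading of that syntax, not Gateway's actual implementation: `parse_path`, `transform_field`, and `placeholder_encrypt` are hypothetical names, and the "encryption" only swaps in a marker so the example stays focused on path handling.

```python
import re

# A path segment is either a plain name or a bracketed list index.
_TOKEN = re.compile(r"([^.\[\]]+)|\[(\d+)\]")


def parse_path(path: str) -> list:
    """Split 'a.b[0].c' into ['a', 'b', 0, 'c']."""
    return [name if name else int(index) for name, index in _TOKEN.findall(path)]


def transform_field(record, path: str, fn) -> None:
    """Apply fn to the value at path, in place. Paths that do not resolve are skipped."""
    *parents, last = parse_path(path)
    node = record
    try:
        for token in parents:
            node = node[token]
        node[last] = fn(node[last])
    except (KeyError, IndexError, TypeError):
        pass  # field absent in this record: nothing to protect


def placeholder_encrypt(value):
    # Stand-in for a real cipher call; returns an opaque marker only.
    return "<encrypted>"


event = {
    "name": "Jane Doe",
    "user": {"address": {"street": "1 Main St"}},
    "transactions": [{"amount": 149.99}, {"amount": 20.0}],
}

for path in ("user.address.street", "transactions[0].amount", "missing.field"):
    transform_field(event, path, placeholder_encrypt)

print(event)
```

Skipping unresolved paths is a design choice in this sketch: records that lack an optional field pass through unchanged instead of failing the whole message.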

Schema-Agnostic Encryption

For environments where data schemas evolve, encryption continues uninterrupted even if the data structure changes. New fields can be added to encryption policies without redeploying producers.

Just-in-Time Decryption

Different consumers can receive different views of the data. An external partner's API client receives ssn: "[ENCRYPTED]". Your internal fraud team gets the full record. Same topic, no duplication, access controlled by policy.
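As a rough sketch of that policy-driven view, the Python below masks protected fields for consumers that are not allowed to see them. The policy table, consumer names, and `view_for` function are hypothetical, and the record stands for the form the proxy holds after decryption; a real proxy would only decrypt the fields a consumer is entitled to.

```python
MASK = "[ENCRYPTED]"

# Fields that are stored encrypted in the topic.
PROTECTED_FIELDS = {"ssn", "email"}

# Hypothetical policy: protected fields each consumer may see in clear text.
DECRYPT_POLICY = {
    "fraud-team": {"ssn", "email"},
    "partner-api": set(),
}


def view_for(consumer: str, record: dict) -> dict:
    """Return the record as this consumer is allowed to see it."""
    allowed = DECRYPT_POLICY.get(consumer, set())  # unknown consumers see no PII
    return {
        field: value if field not in PROTECTED_FIELDS or field in allowed else MASK
        for field, value in record.items()
    }


record = {
    "name": "Jane Doe",
    "email": "jane@example.com",
    "ssn": "123-45-6789",
    "order_total": 149.99,
}

print(view_for("partner-api", record))
print(view_for("fraud-team", record))
```

Defaulting unknown consumers to an empty allow-list keeps the sketch fail-closed: a consumer missing from the policy sees masked values, never plaintext.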

Why Client-Side Always Fails at Scale

| Capability | Proxy-Layer | Client-Side |
|---|---|---|
| Centralized governance | Yes | No |
| Code changes required | No | Yes |
| Works with all applications | Yes | No |
| Key management | Centralized via KMS | Distributed across clients |
| Policy enforcement | Real-time updates | Requires redeployment |
| Auditing | Unified logs | Fragmented |
| Developer overhead | None | High |
| Credential sprawl | Contained | Uncontrolled |

Skip the painful middle years. Start with proxy-layer encryption.

How Proxy Encryption Makes Kafka Providers Interchangeable

When encryption happens before data reaches the broker, and keys live in systems you control, the provider becomes interchangeable infrastructure.

Want to migrate from Confluent Cloud to Amazon MSK? Mirror the topics to the new cluster, then change Gateway's connection string. The ciphertext is copied byte for byte. The keys don't change. Client applications don't change.

This is the real value of proxy-layer encryption: provider independence. Your security posture remains constant regardless of which Kafka provider you choose.

Try Conduktor Gateway Encryption

Securing data at rest in Kafka requires a decision: build and maintain client-side encryption across every application, or centralize encryption at the proxy layer.

Conduktor's approach—field-level encryption, crypto shredding, envelope encryption with your KMS—eliminates the technical debt of DIY solutions while meeting compliance requirements.

Book a demo to see how Conduktor handles encryption for your Kafka environment, or explore the encryption tutorial.