BYOC, Storage-Compute Separation, and Shift Left Are Reshaping Data Streaming

BYOC, compute-storage separation, and Shift Left are three of the most discussed trends in data streaming. Aiven and Conduktor share their views on each, and on AI-driven real-time data.

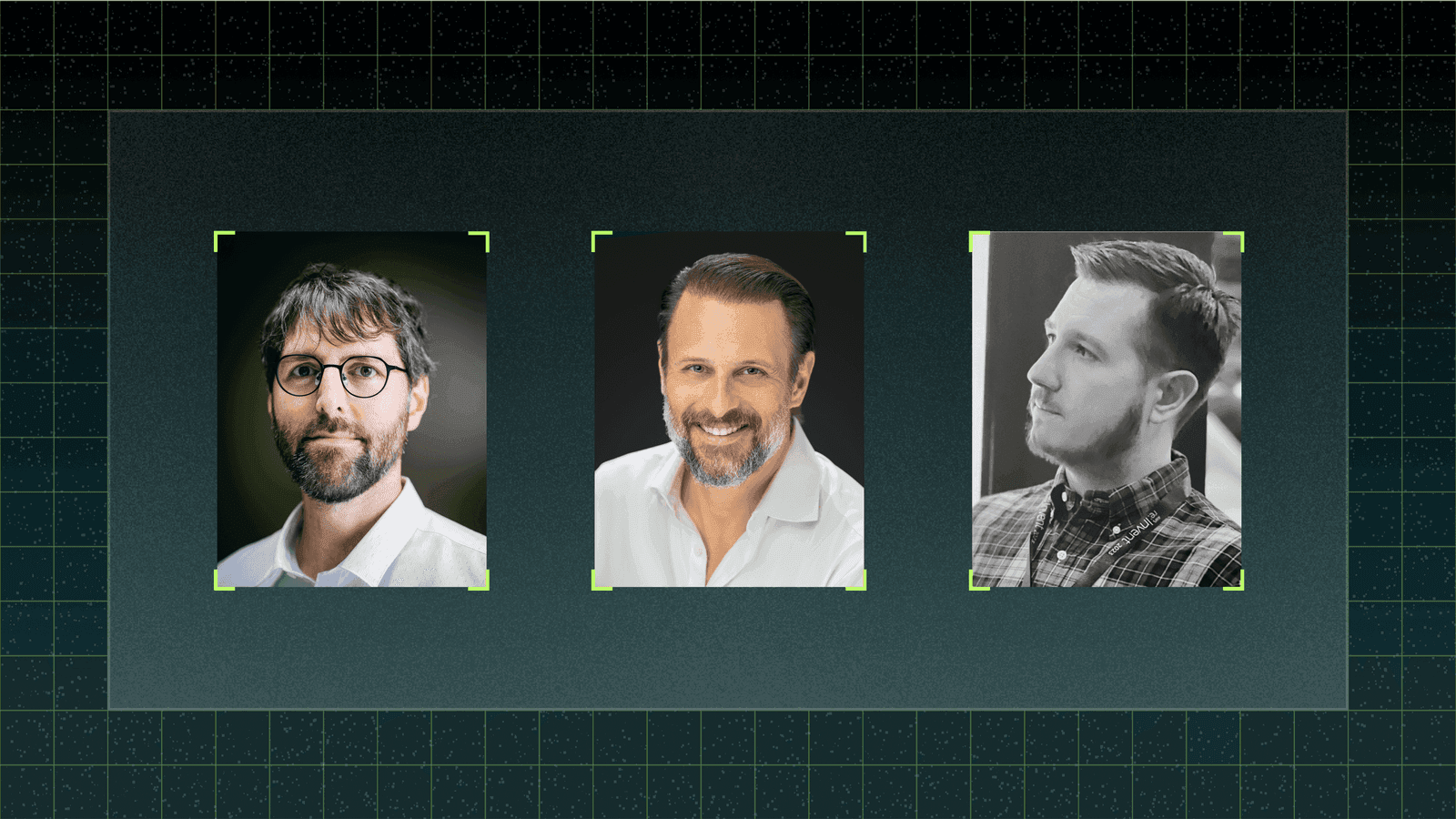

Conduktor launched its "Streamline: Conversations in Data" series with an episode on data streaming trends. Quentin Packard (VP Sales, Conduktor) moderated a discussion with Philip Yonov (Head of Streaming Data, Aiven) and Stéphane Derosiaux (CTO & Co-Founder, Conduktor).

BYOC Gives Organizations Control Over Their Data and Costs

"Bring Your Own Cloud" lets organizations keep data in their own cloud accounts while using managed services. Two drivers are pushing adoption: data privacy and infrastructure costs.

Philip: "Streaming data is premium data, and BYOC allows for cost-effective infrastructure that doesn't compromise on security."

Because the infrastructure runs in the customer's own cloud account, large deployments can apply cloud provider savings plans that a managed SaaS offering cannot access. Stéphane pointed out another angle: the risk that AI platforms train on customer data. BYOC keeps sensitive data inside your own perimeter.

BYOC works best for organizations with large, sensitive data streams that want both control and managed tooling.

Separating Compute from Storage Cuts Costs as Data Volumes Grow

Data volumes keep growing, but traditional architectures couple storage and compute: you cannot scale one without scaling the other. The result is paying for capacity you do not need, such as extra compute nodes added only to hold more retained data.

Stéphane: "We keep accumulating massive amounts of data, but traditionally, storage and compute grew together, leading to inefficiencies. By decoupling them, we can store data on platforms like S3 while using lightweight, scalable compute resources only when necessary."

Philip added that separation enables better throughput optimization. It also opens the door to globally distributed streaming with higher availability.

For real-time workloads with growing data volumes, decoupling compute and storage directly reduces infrastructure costs.
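The cost argument can be made concrete with a rough back-of-the-envelope model. The sketch below is illustrative only: every price and capacity figure is a hypothetical placeholder, the function names are invented for this example, and the model ignores replication, request fees, and network transfer.

```python
import math

# All figures are hypothetical placeholders, not real cloud prices.
NODE_MONTHLY_COST = 500.0            # coupled node: compute plus local disk
NODE_DISK_TB = 2.0                   # usable local disk per coupled node
NODE_THROUGHPUT_MBPS = 50.0          # sustained throughput per node
STATELESS_NODE_MONTHLY_COST = 400.0  # compute-only node in a decoupled design
OBJECT_STORAGE_COST_PER_TB = 25.0    # monthly object storage cost per TB


def coupled_cost(retained_tb: float, throughput_mbps: float) -> float:
    """Coupled design: node count is driven by whichever need is larger."""
    nodes_for_storage = math.ceil(retained_tb / NODE_DISK_TB)
    nodes_for_compute = math.ceil(throughput_mbps / NODE_THROUGHPUT_MBPS)
    return max(nodes_for_storage, nodes_for_compute) * NODE_MONTHLY_COST


def decoupled_cost(retained_tb: float, throughput_mbps: float) -> float:
    """Decoupled design: compute and storage are sized and billed separately."""
    nodes_for_compute = math.ceil(throughput_mbps / NODE_THROUGHPUT_MBPS)
    compute = nodes_for_compute * STATELESS_NODE_MONTHLY_COST
    storage = retained_tb * OBJECT_STORAGE_COST_PER_TB
    return compute + storage


if __name__ == "__main__":
    # A storage-heavy workload: long retention, modest throughput.
    print("coupled:  ", coupled_cost(retained_tb=100, throughput_mbps=100))
    print("decoupled:", decoupled_cost(retained_tb=100, throughput_mbps=100))
```

Under these assumed numbers, the coupled design provisions nodes to hold the retained data even though only a few are needed for throughput, which is the inefficiency Stéphane describes. With different prices or a throughput-bound workload the gap narrows, so treat this as a way to reason about the trade-off rather than a forecast.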

Shift Left Means Catching Data Quality Problems at the Source

"Shift Left" pushes data quality, security, and testing earlier in the development cycle. In real-time streaming, this matters because errors propagate fast.

Philip had a contrarian view: "When I hear 'Shift Left,' I think, stop with the marketing! In reality, Kafka is already left, it operates in real-time and interacts with business processes at the microservice level."

His real point: focus on "zero copy" architectures to minimize data movement across systems. Data movement remains one of the most expensive operations in cloud environments.

Stéphane emphasized that developers need tools for data security and quality that do not overwhelm them. The goal is catching problems early without adding friction.
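As one illustration of catching problems at the source, a producer can validate each event before it is published. This is a minimal sketch, not a description of any Conduktor or Aiven feature: the schema, the field names, and the `send` and `reject` callbacks are all hypothetical stand-ins for a real producer client and a dead-letter handler.

```python
from typing import Any, Callable

# Hypothetical schema for an "order" event: field name -> expected type.
ORDER_SCHEMA: dict[str, type] = {
    "order_id": str,
    "amount_cents": int,
    "currency": str,
}


def validate(event: dict[str, Any], schema: dict[str, type]) -> list[str]:
    """Return a list of human-readable problems; an empty list means valid."""
    errors: list[str] = []
    for field, expected in schema.items():
        if field not in event:
            errors.append(f"missing field: {field}")
        elif not isinstance(event[field], expected):
            actual = type(event[field]).__name__
            errors.append(f"{field}: expected {expected.__name__}, got {actual}")
    return errors


def publish_if_valid(
    event: dict[str, Any],
    send: Callable[[dict[str, Any]], None],
    reject: Callable[[dict[str, Any], list[str]], None],
) -> bool:
    """Publish only valid events; hand invalid ones to the reject callback."""
    errors = validate(event, ORDER_SCHEMA)
    if errors:
        reject(event, errors)
        return False
    send(event)
    return True


if __name__ == "__main__":
    sent: list[dict[str, Any]] = []
    rejected: list[tuple[dict[str, Any], list[str]]] = []

    def record_rejection(event: dict[str, Any], errors: list[str]) -> None:
        rejected.append((event, errors))

    publish_if_valid({"order_id": "A1", "amount_cents": 1299, "currency": "EUR"},
                     sent.append, record_rejection)
    publish_if_valid({"order_id": "A2", "amount_cents": "12.99"},
                     sent.append, record_rejection)
    print(len(sent), "sent;", len(rejected), "rejected")
```

Keeping the check in a small function that returns a list of errors, rather than raising, lets the developer decide how strict to be, which fits the goal of catching problems early without adding friction.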

LLMs Will Embed Directly Into Stream Processing

The conversation turned to AI and real-time data management.

Stéphane: "Imagine plugging LLMs directly into your stream processing. As costs decrease and latency improves, it's only a matter of time before we see AI-driven insights embedded within real-time streaming systems."

If inference costs keep falling and latency keeps improving, this could become practical sooner than many expect.

Organizations Adopting These Patterns Now Will Have an Advantage

BYOC, compute-storage separation, and Shift Left are not theoretical. They are production patterns that reduce costs and improve data quality. With AI-powered stream processing on the horizon, organizations building this foundation today will be ready to capitalize on the next wave.