Data Governance Framework: Roles and Responsibilities

Implementing an effective data governance framework requires more than just policies and procedures, it demands clear definition of roles and responsibilities across your organization. Without well-defined accountability, even the most sophisticated governance strategies fail to deliver value. This guide explores the essential roles within a data governance framework and how they work together to ensure data quality, compliance, and strategic value.

Why Roles Matter in Data Governance

Data governance is inherently cross-functional. Data flows through multiple departments, systems, and stakeholders, each with different perspectives and priorities. A clear role structure ensures that everyone understands their responsibilities, decision-making authority, and accountability for data-related outcomes.

When roles are ambiguous, organizations face several challenges:

- Data quality issues persist without clear ownership

- Compliance gaps emerge from unclear accountability

- Strategic initiatives stall due to decision-making bottlenecks

- Teams duplicate efforts or work at cross-purposes

A well-structured framework assigns responsibility while fostering collaboration, creating a culture where data is treated as a strategic asset.

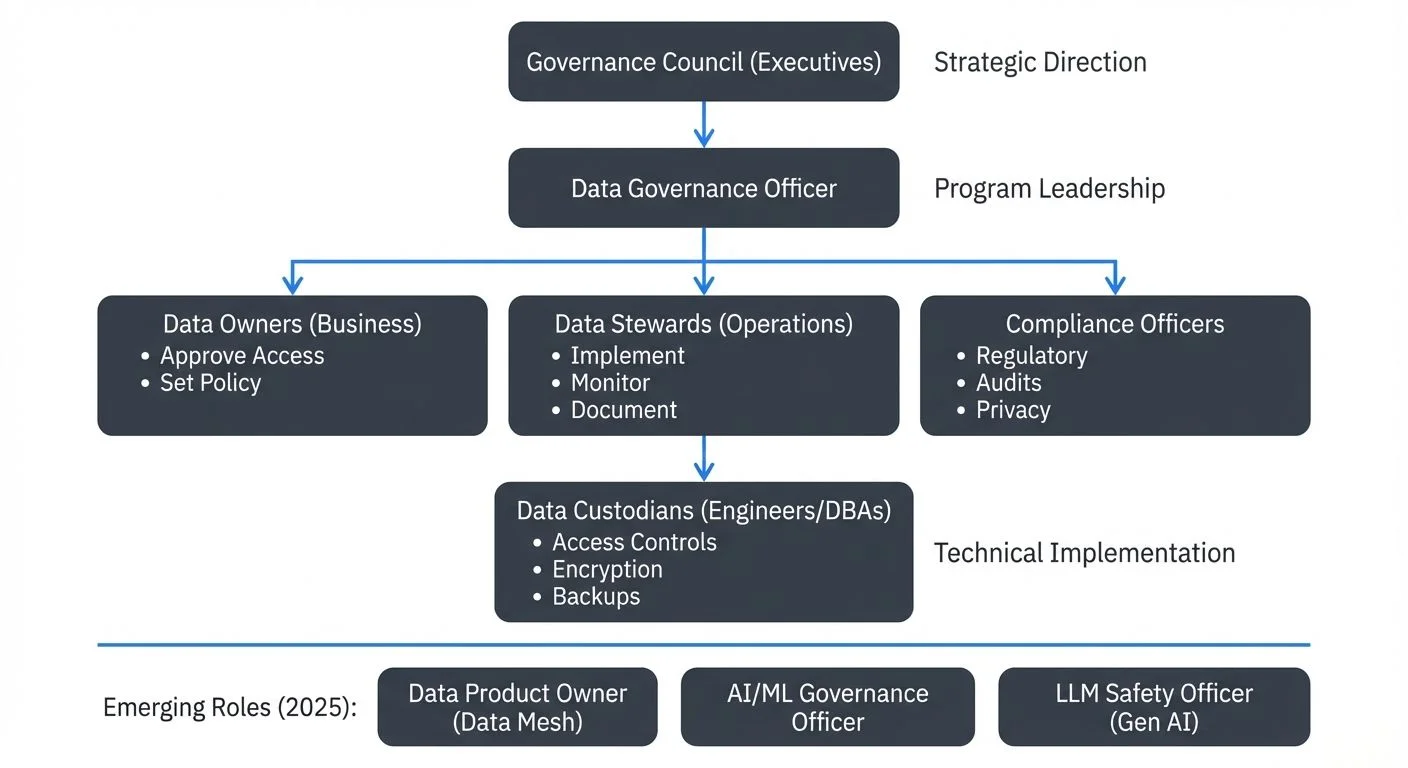

Role Overview at a Glance

Before diving into details, here's a quick comparison of key governance roles:

| Role | Primary Focus | Decision Authority | Time Commitment | Technical Depth |

|---|---|---|---|---|

| Data Governance Council | Strategic direction | High - approves policies | Quarterly meetings | Low - business focused |

| Data Governance Officer | Program leadership | Medium - coordinates execution | Full-time | Medium - bridging role |

| Data Owner | Domain accountability | High - approves access | Part-time oversight | Low - business domain expert |

| Data Steward | Operational execution | Medium - implements policies | Full-time or significant part-time | Medium-High - domain + technical |

| Data Custodian | Technical implementation | Low - follows policies | Full-time | High - infrastructure specialist |

| Compliance Officer | Regulatory alignment | Medium - defines requirements | Full-time | Medium - regulatory + technical |

| Data Product Owner | Product management | High - within domain | Full-time | Medium-High - product + technical |

| AI/ML Governance Officer | Model governance | Medium - ML-specific policies | Full-time | High - ML/AI specialist |

Core Governance Roles

Data Governance Council

The Data Governance Council sits at the top of the governance hierarchy, providing strategic direction and executive sponsorship. Typically composed of senior leaders including the CTO, Chief Data Officer, and heads of key business units, this council sets policies, approves standards, and allocates resources for governance initiatives.

Key Responsibilities:

- Define governance strategy aligned with business objectives

- Approve data policies, standards, and procedures

- Resolve escalated issues and conflicts

- Monitor governance program effectiveness

- Ensure adequate funding and resources

The council meets quarterly or bi-annually, focusing on strategic decisions rather than day-to-day operations. Their visible commitment signals to the organization that data governance is a business priority, not just an IT initiative.

Data Governance Officer

The Data Governance Officer (DGO) serves as the operational leader of the governance program, translating executive vision into actionable initiatives. Reporting to the Chief Data Officer or CTO, the DGO coordinates between technical teams, business stakeholders, and compliance functions.

Key Responsibilities:

- Design and implement governance frameworks

- Facilitate governance council meetings and decisions

- Monitor compliance with data policies

- Coordinate training and awareness programs

- Track governance metrics and KPIs

- Manage relationships with data stewards and owners

For organizations managing streaming data platforms, the DGO must understand real-time data challenges, including event schema management, data lineage in stream processing, and access control for streaming topics. Modern governance platforms help DGOs visualize and manage streaming environments with tools like:

- OpenMetadata and DataHub for open-source data cataloging and lineage tracking

- Conduktor for Kafka cluster governance, monitoring, and stream governance

- Atlan and Collibra for enterprise-wide data governance with streaming integration

- Apache Atlas for metadata management in distributed data ecosystems

These tools provide centralized governance capabilities without becoming bottlenecks to real-time data flows. For detailed guidance on implementing governance for streaming data, see Building and Managing Data Products and Data Mesh Principles and Implementation.

Data Owners

Data Owners are senior business leaders accountable for specific data domains. A Chief Marketing Officer might own customer data, while a Chief Financial Officer owns financial data. This business-led ownership ensures that data governance serves business objectives rather than existing solely as a technical exercise.

Key Responsibilities:

- Define business rules and quality standards for their domain

- Approve access requests to sensitive data

- Set data retention and archival policies

- Accountable for compliance within their domain

- Allocate resources for data quality initiatives

Data Owners have decision-making authority but delegate day-to-day management to Data Stewards. Their involvement ensures governance decisions reflect business priorities and risk tolerance.

Data Stewards

Data Stewards are the operational champions of data governance, working closely with technical teams and business users. While Data Owners set policy, Data Stewards implement and enforce it. They serve as subject matter experts for their assigned data domains.

Key Responsibilities:

- Document data definitions, lineage, and business context

- Monitor data quality and investigate anomalies

- Coordinate data quality remediation efforts

- Maintain data catalogs and metadata

- Review and process access requests

- Provide training to data consumers

In streaming environments, Data Stewards manage schema registries (see Schema Registry and Schema Management), define topic naming conventions, and ensure proper data classification tags flow through Kafka topics. They work with platform teams to implement governance controls without impeding real-time data flows. For comprehensive approaches to data quality monitoring, see Building a Data Quality Framework and Automated Data Quality Testing.

Data Custodians

Data Custodians are technical professionals responsible for the physical management and security of data. Database administrators, platform engineers, and security specialists fill this role, implementing the technical controls that enforce governance policies.

Key Responsibilities:

- Implement access controls and encryption

- Manage backup and recovery procedures

- Monitor system performance and availability

- Apply security patches and updates

- Execute data retention and deletion procedures

For streaming platforms, Data Custodians configure authentication and authorization for Kafka clusters (running on Kafka 4.0+ with KRaft mode for simplified operations), implement encryption in transit and at rest, and manage disaster recovery procedures. Modern governance platforms like Conduktor enable Data Custodians to implement fine-grained access controls through intuitive interfaces (see Conduktor RBAC setup) and comprehensive audit logging (see Audit Logging for Streaming Platforms) without extensive custom development. For security implementation details, see Kafka ACLs and Authorization Patterns.

Compliance Officers

Compliance Officers ensure that data practices align with regulatory requirements such as GDPR, CCPA, HIPAA, and industry-specific regulations. They bridge governance and legal/regulatory functions, translating complex requirements into practical controls.

Key Responsibilities:

- Monitor regulatory landscape and assess impact

- Define compliance requirements for data handling

- Conduct privacy impact assessments

- Manage data breach response procedures

- Coordinate regulatory audits and reporting

- Review and approve data sharing agreements

In real-time data environments, Compliance Officers must address unique challenges such as ensuring the right to deletion in event streams, maintaining audit trails for streaming data access, and managing consent across distributed systems.

Emerging Roles in 2025

As data governance evolves to meet new technological challenges, several specialized roles have emerged or gained prominence:

Data Product Owner

In Data Mesh architectures, the Data Product Owner combines business domain expertise with technical understanding to manage data as a product. This role is central to federated data governance models where domains own and govern their own data products.

Key Responsibilities:

- Define data product strategy and roadmap

- Ensure data product meets quality, discoverability, and usability standards

- Own the data product lifecycle from creation to retirement

- Manage data product SLAs and consumer relationships

- Collaborate with platform teams on infrastructure needs

The Data Product Owner bridges traditional Data Owner and Data Steward responsibilities but with product management discipline. For detailed guidance, see Building and Managing Data Products and Data Product Governance.

AI/ML Governance Officer

With the explosion of machine learning and AI systems in 2024-2025, the AI/ML Governance Officer ensures responsible development and deployment of AI models. This role focuses on model governance, fairness, explainability, and ethical AI practices.

Key Responsibilities:

- Define and enforce ML model governance policies

- Oversee model risk management and validation

- Ensure model documentation and lineage tracking

- Monitor for model bias, drift, and fairness issues

- Coordinate responsible AI practices across teams

- Manage AI/ML compliance with emerging regulations

This role is particularly critical for organizations implementing LLM-powered applications, where data governance intersects with prompt engineering, fine-tuning datasets, and retrieval-augmented generation (RAG) pipelines.

LLM Safety and Ethics Officer

As organizations adopt Large Language Models (LLMs) and generative AI, a specialized role has emerged to govern these powerful technologies. The LLM Safety Officer focuses on preventing misuse, ensuring ethical deployment, and managing risks unique to generative AI.

Key Responsibilities:

- Define guardrails for LLM inputs and outputs

- Prevent training data contamination and leakage

- Monitor for jailbreak attempts and prompt injection attacks

- Ensure responsible use of synthetic data

- Manage consent for data used in model training

- Coordinate red-teaming exercises for AI safety

This role works closely with Compliance Officers on regulations like the EU AI Act and emerging AI governance frameworks.

Cloud FinOps Data Governance Specialist

As data infrastructure moves to cloud platforms, the intersection of financial operations and data governance requires specialized expertise. This role ensures cost-effective governance without sacrificing control.

Key Responsibilities:

- Optimize data storage costs while maintaining retention policies

- Monitor and control compute costs for data processing

- Implement data lifecycle management for cost optimization

- Balance data accessibility with storage tier economics

- Track governance-related infrastructure costs

This role is essential in organizations running large-scale streaming platforms where data retention and processing costs can escalate quickly.

Role Interactions in Practice

These roles don't operate in isolation. Consider a scenario where a marketing team wants to integrate real-time customer behavior data from a Kafka stream into their analytics platform:

- Data Steward receives the request and validates it aligns with customer data policies

- Compliance Officer reviews for privacy implications and consent requirements

- Data Owner approves based on business value and risk assessment

- Data Custodian implements technical access controls and monitoring

- Data Governance Officer tracks the request for metrics and identifies process improvements

This collaborative approach balances innovation with control, enabling teams to move quickly while maintaining governance standards.

Implementing Your Framework

Start by identifying executives who can serve on your Data Governance Council and champion the initiative. Select a Data Governance Officer with both technical understanding and business acumen. Identify natural Data Owners based on your organizational structure, and recruit Data Stewards from teams with strong domain knowledge.

Document roles clearly, but start with a lightweight framework that grows with your program maturity. Perfect is the enemy of good in governance, it's better to establish clear ownership for critical data domains and expand coverage over time.

Modern Implementation Approaches

For Traditional Centralized Governance:

- Implement a data catalog like OpenMetadata, Atlan, or Collibra

- Establish RACI matrices for each data domain

- Use tools like Apache Atlas for metadata management

- Focus on critical data assets first, then expand

For Data Mesh / Federated Governance:

- Start with defining data product standards

- Implement federated computational governance (policy as code)

- Use self-serve platforms for domain teams

- Balance autonomy with global standards

- See Data Mesh Principles and Implementation for detailed guidance

For Streaming Data Platforms:

- Invest in Kafka-native governance tools (Conduktor for comprehensive governance and monitoring)

- Implement schema governance early with Schema Registry

- Establish topic naming and tagging conventions

- Enable self-serve access with guardrails

- Track data lineage through stream processing pipelines

- See Schema Registry and Schema Management

For AI/ML Environments:

- Add ML model governance tools (MLflow, Weights & Biases with governance extensions)

- Implement feature store governance

- Establish model cards and documentation standards

- Create guardrails for LLM deployments

- Monitor for bias and drift continuously

Modern governance platforms provide role-based access control, automated lineage tracking, and policy enforcement without becoming bottlenecks. The key is choosing tools that fit your architecture, cloud-native for cloud workloads, streaming-native for real-time platforms, and federated for Data Mesh implementations.

Balancing Autonomy and Governance with Self-Service

Traditional governance frameworks create tension between control and agility, centralized approval processes slow development teams, leading to shadow IT or governance circumvention. Self-Service frameworks resolve this by enabling teams to manage resources independently through version-controlled configuration files (GitOps), with the platform automatically validating requests against governance policies before provisioning.

This approach eliminates bottlenecks while ensuring adherence to organizational standards. Version control creates audit trails for Compliance Officers, Data Owners retain approval authority through pull request workflows, and consistent policy enforcement eliminates manual configuration errors. For implementation guidance, see Self-Service.

Conclusion

A successful data governance framework depends on clearly defined roles working in concert. From strategic leadership in the Governance Council to operational execution by Data Stewards and Custodians, each role contributes to transforming data from a liability into a strategic asset.

As data environments evolve in 2025, new roles emerge to address contemporary challenges: Data Product Owners for Data Mesh architectures, AI/ML Governance Officers for responsible AI deployment, and LLM Safety Officers for generative AI governance. These specialized roles complement traditional governance structures, ensuring organizations can govern modern data architectures effectively.

Whether you're implementing centralized governance, adopting Data Mesh principles, managing streaming platforms, or deploying AI systems, the key is establishing clear accountability while enabling innovation. With the right roles, responsibilities, and modern tooling, data governance becomes an enabler rather than a bottleneck.

For related guidance on specific governance domains, see:

- Data Product Governance for product-centric governance

- Metadata Management: Technical vs Business Metadata for metadata governance

- What is a Data Catalog: Modern Data Discovery for discovery and cataloging

- Building a Business Glossary for Data Governance for semantic governance

Related Concepts

- Schema Registry and Schema Management - Centralized schema governance for streaming data

- Audit Logging for Streaming Platforms - Tracking data access and changes for compliance

- Data Contracts for Reliable Pipelines - Formal agreements between data producers and consumers

Sources and References

- DAMA-DMBOK Data Governance Framework - Industry-standard framework for data management and governance roles

- Data Governance Institute Best Practices - Comprehensive guide to data governance implementation

- Data Mesh Principles (Zhamak Dehghani) - Foundational article on federated data governance

- OpenMetadata Documentation - Open-source metadata management and governance platform

- Confluent Stream Governance - Kafka-native governance capabilities and best practices

- Microsoft Azure Purview Roles - Role-based access control for data governance platforms

- Collibra Data Governance Operating Model - Framework for defining governance roles and responsibilities

- GDPR Data Protection Roles - Regulatory requirements for data governance and compliance roles

- EU AI Act - Emerging AI governance regulation requiring specialized roles

- NIST AI Risk Management Framework - Framework for AI/ML governance and risk management

Written by Stéphane Derosiaux · Last updated January 25, 2026