December 2024

SQL on Kafka, cluster health dashboard, and shareable filters

Query Kafka data with SQL. View cluster health at a glance. Share consume page filters with teammates.

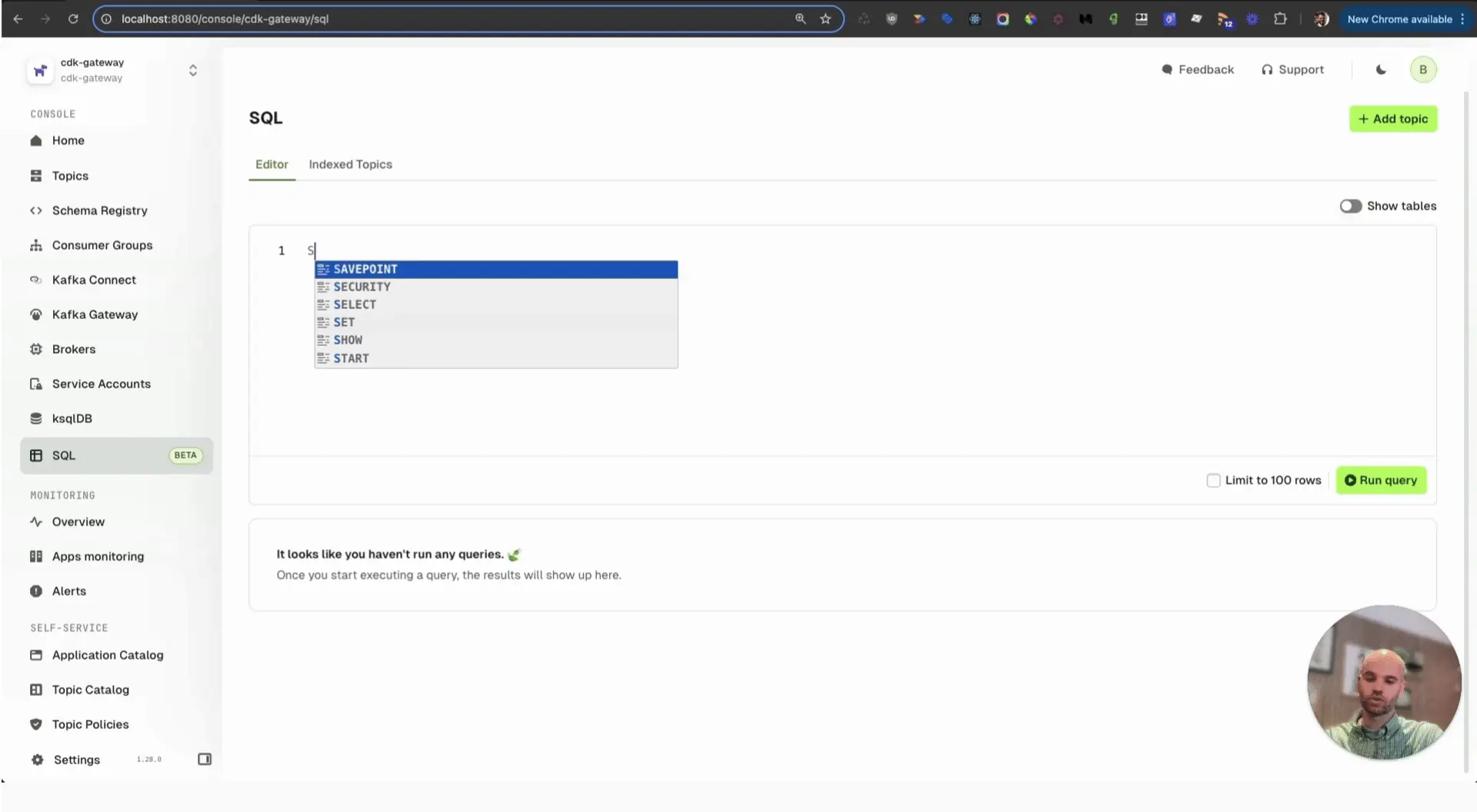

Query Kafka data with SQL

SQL on Kafka lets teams extract insights from real-time and historical data streams without duplicating data to external systems. Conduktor handles RBAC enforcement, controls which topics are indexed, and transforms Kafka data into a columnar format for querying.

Use cases by role:

- Data engineers: Explore raw streams, troubleshoot pipelines, and debug messages with data masking and access controls applied

- Business teams: Analyze orders and customer events on-demand without permanent storage

- Ops teams: Query metadata (timestamps, offsets, compression) to optimize performance and identify duplicates
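The Ops use case of spotting duplicates comes down to grouping records by content and keeping every group with more than one row. That is the kind of logic a SQL `GROUP BY ... HAVING COUNT(*) > 1` query expresses. The Python sketch below applies that logic to in-memory records. The `Record` type and its fields (`partition`, `offset`, `timestamp`, `key`, `value`) are hypothetical stand-ins and are not Conduktor's table schema.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    # Hypothetical shape of one Kafka record plus its metadata columns.
    partition: int
    offset: int
    timestamp: int  # epoch milliseconds
    key: str
    value: str


def find_duplicates(records):
    """Group records by (key, value) and return the groups seen more than once.

    Mirrors the idea of: GROUP BY key, value HAVING COUNT(*) > 1
    """
    groups = defaultdict(list)
    for r in records:
        # Remember where each payload was written (partition, offset).
        groups[(r.key, r.value)].append((r.partition, r.offset))
    # Keep only payloads that occur more than once.
    return {k: locs for k, locs in groups.items() if len(locs) > 1}


records = [
    Record(0, 10, 1_700_000_000_000, "order-1", '{"total": 42}'),
    Record(0, 11, 1_700_000_000_500, "order-2", '{"total": 7}'),
    Record(0, 12, 1_700_000_001_000, "order-1", '{"total": 42}'),  # same payload written twice
]
print(find_duplicates(records))
# {('order-1', '{"total": 42}'): [(0, 10), (0, 12)]}
```

The returned partition and offset pairs point back at the exact messages involved. These locations are the detail that offset and timestamp metadata make queryable.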

Read more in Transforming Real-Time Data Into Instant Insights.

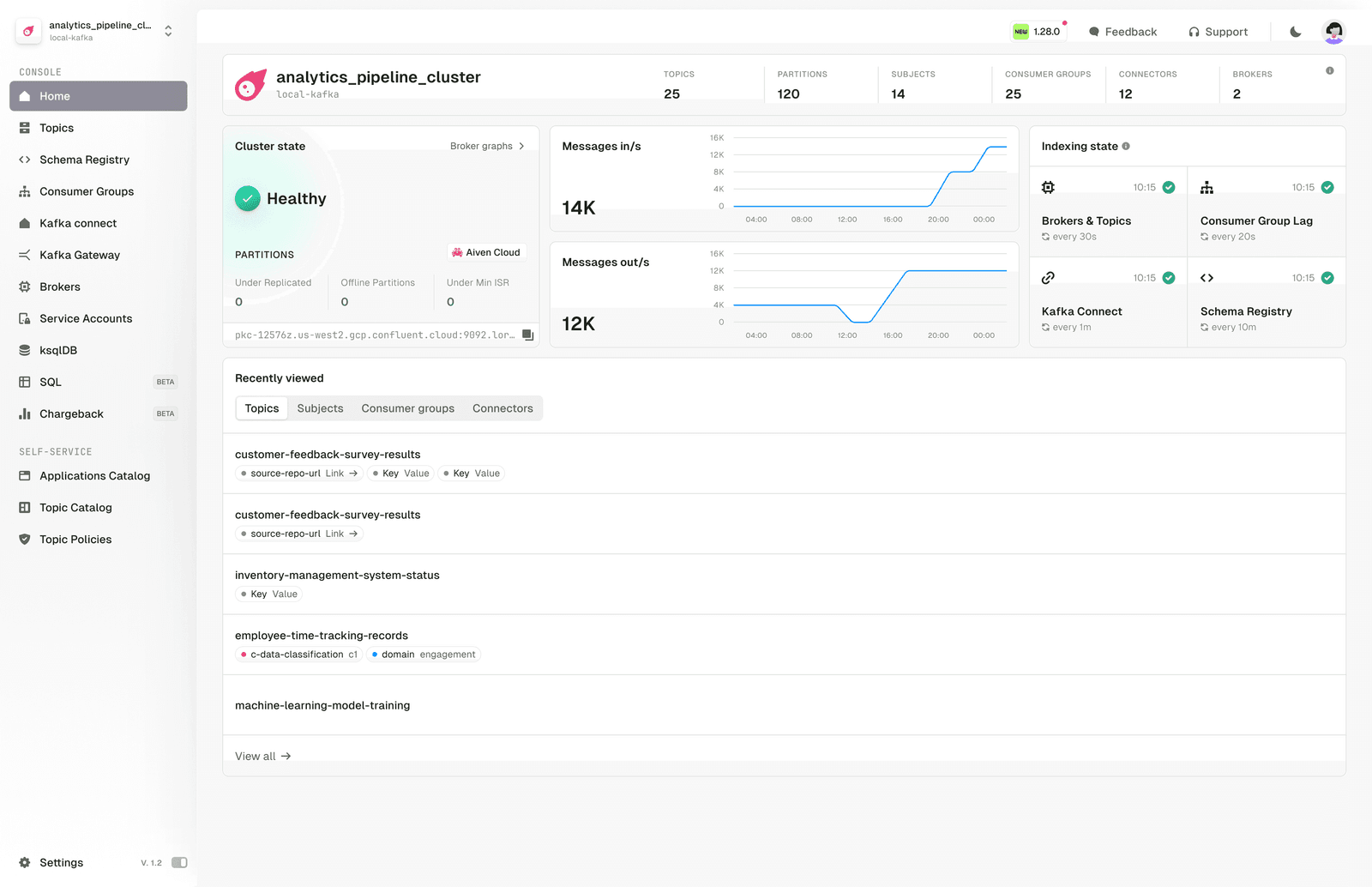

View cluster health at a glance

The redesigned homepage shows Kafka cluster health, indexing module status, and recently viewed resources.

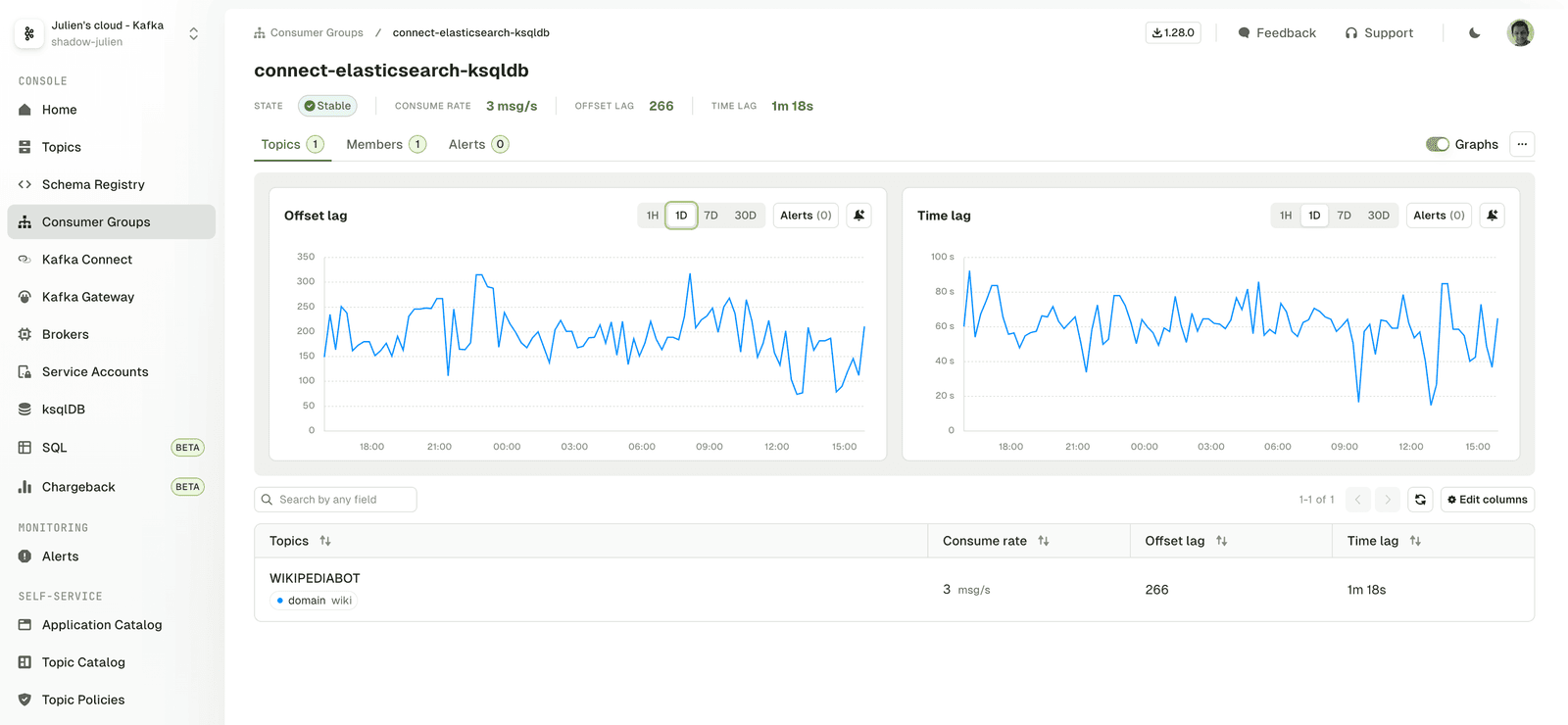

View consumer groups by topic or member

The Consumer Group page organizes data by subscribed topics or active members. Drill down to individual member or topic-partition assignments.
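The topic view and the member view both present the same assignment data, grouped differently. The minimal Python sketch below assumes a hypothetical `by_member` mapping from member ID to assigned `(topic, partition)` pairs and derives the topic view from it. The member IDs and topic names are made up.

```python
from collections import defaultdict


def by_topic(by_member):
    """Pivot member -> [(topic, partition)] into topic -> {partition: member}.

    Within one consumer group each partition is assigned to at most one
    member, so a partition -> member dict is enough.
    """
    view = defaultdict(dict)
    for member, assignments in by_member.items():
        for topic, partition in assignments:
            view[topic][partition] = member
    # Sort topics and partitions so the output is stable.
    return {t: dict(sorted(parts.items())) for t, parts in sorted(view.items())}


by_member = {
    "consumer-1": [("orders", 0), ("payments", 1)],
    "consumer-2": [("orders", 1), ("payments", 0)],
}
print(by_topic(by_member))
# {'orders': {0: 'consumer-1', 1: 'consumer-2'}, 'payments': {0: 'consumer-2', 1: 'consumer-1'}}
```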

Control which topics appear in the catalog

Set `catalogVisibility` to `PUBLIC` or `PRIVATE` on a topic to control whether it appears in the topic catalog.

apiVersion: kafka/v2

kind: Topic

metadata:

cluster: shadow-it

name: click.event-stream.avro

catalogVisibility: PUBLIC # or PRIVATE

spec:

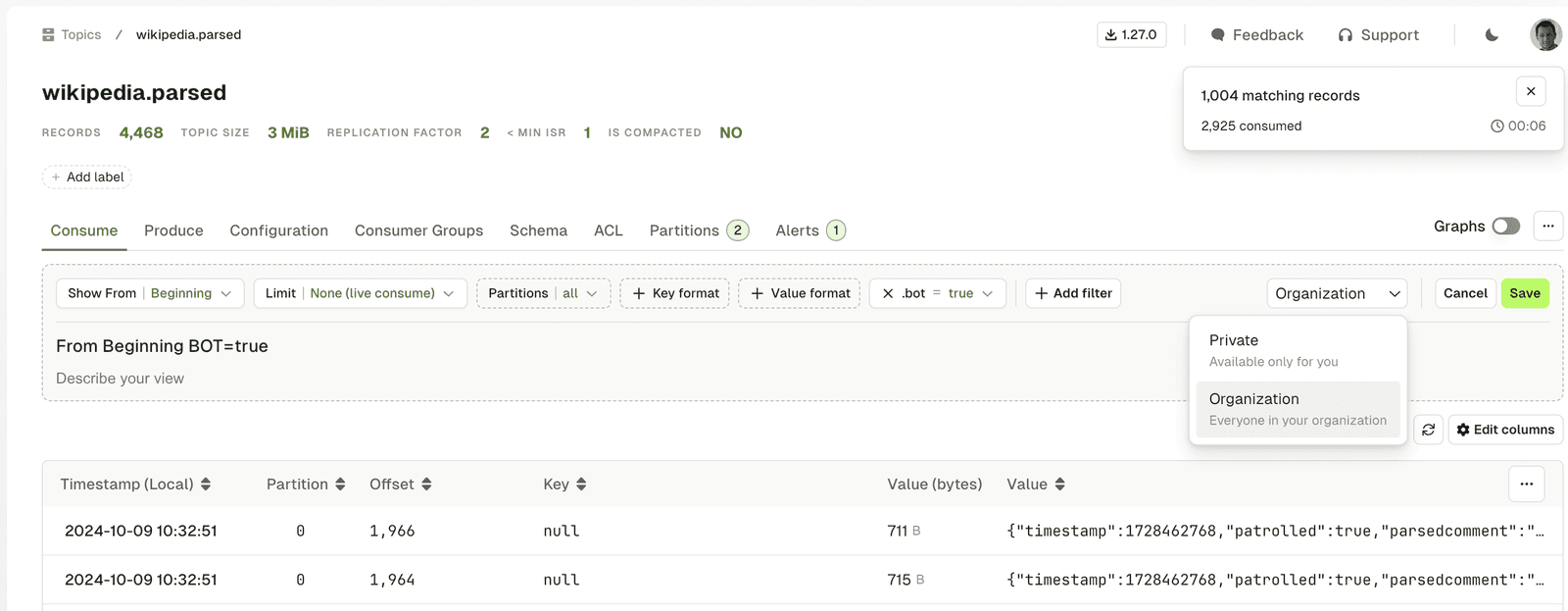

...Share consume page filters with teammates

Filters in the Topic Consume view are shareable. Save filters as Private or Organization-wide to ensure everyone works with the same data subset.

Export audit logs to Kafka

Publish audit log events to a Kafka topic in CloudEvents format. Consume the stream to trigger actions (like creating ServiceNow tickets) for specific events.
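A downstream consumer mostly needs to pick out the events it cares about. The sketch below assumes that events arrive as JSON strings shaped like the sample event in this section. It matches on `data.eventType` and returns an action name. `ACTIONS`, `route_event`, and the `open_ticket` action are hypothetical, and the actual ticket creation (for example, a ServiceNow API call) is left out.

```python
import json

# Hypothetical mapping from audit eventType to a follow-up action name.
ACTIONS = {
    "Kafka.Topic.Create": "open_ticket",
}


def route_event(raw):
    """Parse one CloudEvents JSON payload; return (action, resource) or None."""
    event = json.loads(raw)
    data = event.get("data", {})
    action = ACTIONS.get(data.get("eventType"))
    if action is None:
        return None  # not an event this consumer acts on
    meta = data.get("metadata", {})
    # Identify the affected resource as cluster/name.
    return action, f"{meta.get('cluster')}/{meta.get('name')}"


sample = (
    '{"data": {"eventType": "Kafka.Topic.Create",'
    ' "metadata": {"name": "website-orders", "cluster": "production"}},'
    ' "specversion": "1.0"}'
)
print(route_event(sample))
# ('open_ticket', 'production/website-orders')
```

Keeping the routing table separate from the parsing lets new event types be handled by adding a mapping entry rather than new branching logic.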

```json
{
  "source": "//kafka/kafkacluster/production/topic/website-orders",
  "data": {
    "eventType": "Kafka.Topic.Create",
    "metadata": {
      "name": "website-orders",
      "cluster": "production"
    }
  },
  "datacontenttype": "application/json",
  "id": "ad85122c-0041-421e-b04b-6bc2ec901e08",
  "time": "2024-10-10T07:52:07.483140Z",
  "type": "AuditLogEventType(Kafka,Topic,Create)",
  "specversion": "1.0"
}
```

Deploy Console with multi-host PostgreSQL

Console's database now supports multi-host PostgreSQL for high availability.

For a full list of changes, read the complete release notes.