What Dash 2025 Revealed About AI Observability Security

Dash 2025 highlighted AI observability risks, from exposed PII to attacks on agent telemetry. Here is how Conduktor and Datadog secure LLMs at scale.

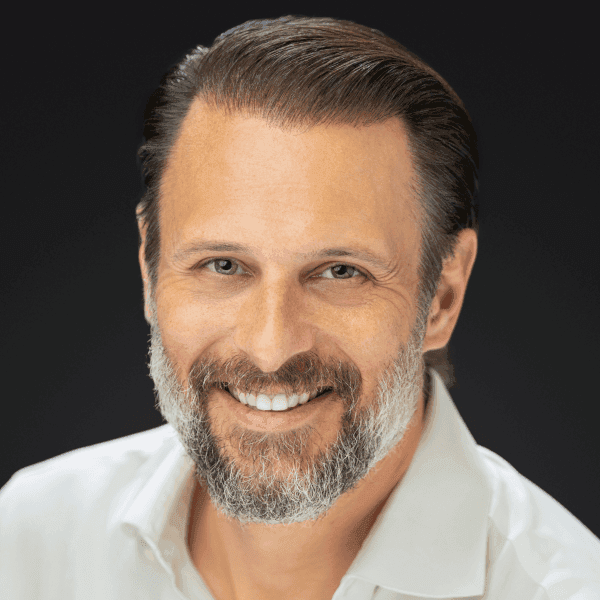

Datadog's annual conference in New York focused on observability for AI systems: LLMs, autonomous agents, and AI-powered monitoring tools.

The consistent message from keynotes, vendors, and attendees: monitoring AI and building AI into monitoring both create new security challenges. Telemetry data exposes sensitive prompts, PII, tokens, and business logic. AI tools introduce new attack vectors.

Security and governance must be built in from day one, not retrofitted.

Observability Expands the Attack Surface

Monitoring AI improves transparency but amplifies security risks. Logs, traces, and agent telemetry contain sensitive data that never appeared in traditional observability pipelines.

LLM dashboards, metrics pipelines, and integrated IDPs become attractive targets. MCP servers and AI agents with real-time access to observability systems need a secure broker layer that filters and controls data flows.

AI systems also ingest erroneous inputs and produce unreliable outputs as a result. Real-time data can create feedback loops: an agent acts on system data, that action generates new data, and the agent then reacts to its own output until its behavior becomes unstable.

Five Security Challenges for AI Telemetry

Telemetry governance. Data must be tagged, masked, or redacted for privacy compliance without losing metric fidelity. Companies need visibility and compliance simultaneously.

Agentic AI anomalies. Autonomous agents require continuous monitoring. Poisoned data or misaligned context must be detected and removed before agents act on it.

AI data access control. Observability data and model execution histories need the same RBAC discipline as data at rest and in motion.

Infrastructure sprawl. Rapid AI deployment multiplies infrastructure across edge, on-prem, cloud, and hybrid. Each component requires consistent governance regardless of team or environment.

Cross-layer correlation. When suspicious behavior occurs, organizations need to correlate signals across application, infrastructure, and model layers while coordinating responses across teams and time zones.
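To make the telemetry governance challenge concrete, here is a minimal Python sketch of redacting sensitive strings from a telemetry event while leaving numeric metrics untouched. The event shape, the field names, and the two regular expressions are assumptions for illustration only; a production pipeline would rely on a vetted PII and secret detector rather than hand-written patterns.

```python
import re

# Hypothetical patterns for illustration; real detectors cover far more cases.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
TOKEN_RE = re.compile(r"\b(?:sk|tok)_[A-Za-z0-9]{8,}\b")


def redact_text(text: str) -> str:
    """Replace email addresses and token-like strings with placeholders."""
    text = EMAIL_RE.sub("[REDACTED_EMAIL]", text)
    return TOKEN_RE.sub("[REDACTED_TOKEN]", text)


def redact_event(event: dict) -> dict:
    """Return a redacted copy of the event.

    String fields are scrubbed; numeric fields (latency, token counts)
    pass through unchanged so dashboards keep their metric fidelity.
    """
    cleaned = {}
    changed = False
    for key, value in event.items():
        if isinstance(value, str):
            scrubbed = redact_text(value)
            changed = changed or scrubbed != value
            cleaned[key] = scrubbed
        else:
            cleaned[key] = value
    # Tag the event so downstream consumers know it was modified.
    cleaned["redacted"] = changed
    return cleaned


if __name__ == "__main__":
    sample = {
        "prompt": "email me at jane@example.com with key sk_abcdef123456",
        "latency_ms": 412,
        "tokens": 87,
    }
    print(redact_event(sample))
```

The `redacted` tag is one possible way to keep an audit trail of which events were altered; the original event is never mutated.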

Datadog's AI Observability Capabilities

Datadog's LLM Observability & Experiments feature simplifies agent execution, displays real-time model performance, and provides visibility into model drift. Their AI Agents Console monitors agentic security, performance, user engagement, and business value in a single interface.

Bits AI Security Analyst automates triage and response for AI-specific threats. Audit Trails and Access Controls trace user actions and lock suspicious accounts. Flex Logs' Frozen Tier retains high-volume log data for up to seven years at low cost, which supports historical analysis.

How Conduktor Adds Governance at the Stream Level

Conduktor provides in-stream governance and quality enforcement before data reaches observability pipelines.

At the topic level, Conduktor redacts sensitive payloads before ingestion, so pipelines never absorb or expose PII. Schema validation enforces data quality for LLM training data and inference traces. Kafka ACLs, metadata, and schema history can be exported to SIEM systems, giving security tools context beyond logs.
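The schema validation idea can be sketched generically. The following Python example is not Conduktor's API; it is a stand-alone illustration, with a hypothetical inference-trace schema and hypothetical destination names, of checking a record before it is forwarded to an observability pipeline.

```python
# Hypothetical schema for an inference trace record (field name -> allowed types).
REQUIRED_FIELDS = {
    "trace_id": str,
    "model": str,
    "latency_ms": (int, float),
    "prompt_tokens": int,
}


def validate_trace(record: dict) -> list:
    """Return a list of validation errors; an empty list means the record is valid."""
    errors = []
    for field, expected in REQUIRED_FIELDS.items():
        if field not in record:
            errors.append(f"missing field: {field}")
            continue
        value = record[field]
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, expected):
            errors.append(f"wrong type for {field}")
    return errors


def route(record: dict) -> str:
    """Send valid records onward and divert invalid ones for inspection."""
    return "observability" if not validate_trace(record) else "dead_letter"


if __name__ == "__main__":
    good = {"trace_id": "t-1", "model": "m", "latency_ms": 12.5, "prompt_tokens": 40}
    bad = {"trace_id": "t-2", "model": "m", "latency_ms": "slow"}
    print(route(good), validate_trace(good))
    print(route(bad), validate_trace(bad))
```

Diverting invalid records to a separate destination, rather than dropping them, keeps evidence available when a malformed or poisoned record needs to be investigated.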

Securing AI Telemetry Now

Start with zero trust in pipelines. Tag, restrict, and encrypt observability data at rest and in motion.

Use Datadog and Conduktor MCP Servers together to validate access, redact prompts, and limit agent scope. Align teams on telemetry visibility policies.
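Limiting agent scope usually comes down to a deny-by-default allowlist. The sketch below shows the idea in plain Python; the `AgentScope` class, the agent ID, and the tool names are all hypothetical and do not correspond to actual Datadog or Conduktor MCP server tools.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentScope:
    """Hypothetical scope object: which tools a given agent may call."""
    agent_id: str
    allowed_tools: frozenset


def authorize(scope: AgentScope, tool: str) -> bool:
    """Deny by default: only tools listed in the scope are permitted."""
    return tool in scope.allowed_tools


def call_tool(scope: AgentScope, tool: str) -> str:
    """Gate every tool call through the scope check before doing any work."""
    if not authorize(scope, tool):
        raise PermissionError(f"{scope.agent_id} may not call {tool}")
    return f"{tool} invoked for {scope.agent_id}"


if __name__ == "__main__":
    # Illustrative tool names, assumed for this example.
    triage_agent = AgentScope("triage-agent", frozenset({"query_metrics", "read_masked_topic"}))
    print(call_tool(triage_agent, "query_metrics"))
    try:
        call_tool(triage_agent, "delete_topic")
    except PermissionError as exc:
        print("blocked:", exc)
```

Keeping the scope immutable (`frozen=True` and `frozenset`) means an agent cannot widen its own permissions at runtime; any change has to go through whoever constructs the scope.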

Monitor the monitors. Audit logs, access analytics, and behavioral tracing reveal how observability systems themselves behave.

As AI-native architectures emerge, security must evolve alongside observability. Datadog provides deep visibility. Conduktor provides governance and control. Together they deliver the trust AI innovation demands.

Observability should be your AI's first line of defense, not your biggest insider threat.

Book a demo to see what Conduktor can do for your environment.