AI Agents Are Accessing Your Data Without Audit Trails

Conduktor Trust provides governance, audit trails, and policy enforcement for AI agents, RAG systems, and real-time operational data pipelines.

AI models now consume live operational data at unprecedented speed. They no longer just generate recommendations: they make business-critical decisions independently.

This is a massive opportunity. But creating complex, real-time pipelines without governance, visibility, or guardrails is risky. Enterprise teams are racing to connect operational data sources (streaming platforms, databases, transactional systems) directly into AI models. The infrastructure to govern, monitor, and secure these systems hasn't kept pace.

We've seen this pattern before: innovation accelerates, gaps in control emerge, and organizations scramble to build governance after the damage has occurred.

The Missing Control Plane for AI-Native Systems

At Conduktor, we've spent years helping enterprises unify and govern their operational data.

Today, advancements in AI are redefining the entire data stack:

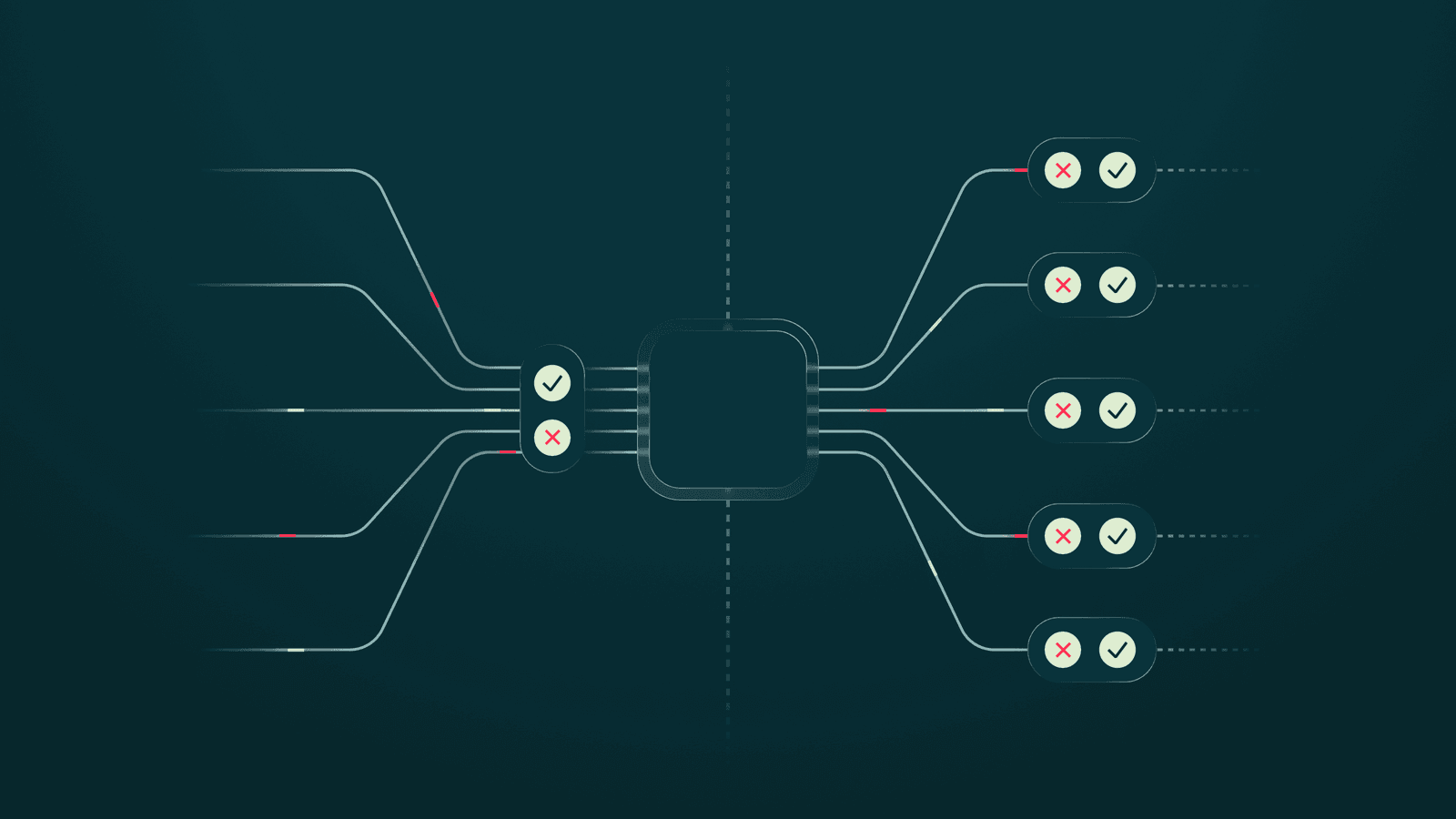

- The Model Context Protocol (MCP) enables AI models to connect with external tools, services, and data, vastly widening their capabilities and enabling multi-step task execution.

- Agentic AI consists of networked AI agents that act independently of human supervision.

- Retrieval-augmented generation (RAG) queries live data at request time, grounding responses in current information without retraining the model.

These developments raise critical questions:

- Which AI agents accessed which datasets?

- What decisions were made based on which data inputs?

- Were enterprise policies enforced in real-time agent interactions?

- How are we detecting and responding to unintended AI behaviors?

The control plane to answer these questions doesn't exist in most enterprises today.

Conduktor Trust: Governance for AI-Powered Systems

Conduktor Trust is the first platform designed to give enterprises full governance, observability, and policy control across real-time, AI-powered, operational systems. Whether agents are triggering operational workflows or RAG systems are querying live data, Trust enforces policies and creates audit trails at the point of action.
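To make "policies and audit trails at the point of action" concrete, here is a minimal Python sketch of the general pattern. It is not Conduktor Trust's API: `PolicyGate`, `AuditRecord`, the allow-list policy shape, and the `fetch` callback are all hypothetical names chosen for illustration. The idea is that every data access by an agent passes through one gate that checks policy and records the outcome, whether the access is allowed or denied.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditRecord:
    """One entry per attempted access, whether allowed or denied."""
    timestamp: str
    agent_id: str
    dataset: str
    action: str
    allowed: bool
    reason: str


@dataclass
class PolicyGate:
    """Hypothetical gate: checks an allow-list, then logs the outcome."""
    # Assumed policy shape: agent_id -> datasets that agent may read.
    allowed_reads: dict[str, set[str]]
    audit_log: list[AuditRecord] = field(default_factory=list)

    def read(self, agent_id: str, dataset: str, fetch):
        """Enforce policy, record the attempt, then fetch if allowed."""
        allowed = dataset in self.allowed_reads.get(agent_id, set())
        reason = "allow-list match" if allowed else "dataset not in agent allow-list"
        # The record is written before any data is returned, so denied
        # attempts show up in the trail as well as successful ones.
        self.audit_log.append(AuditRecord(
            timestamp=datetime.now(timezone.utc).isoformat(),
            agent_id=agent_id,
            dataset=dataset,
            action="read",
            allowed=allowed,
            reason=reason,
        ))
        if not allowed:
            raise PermissionError(f"{agent_id} may not read {dataset}: {reason}")
        return fetch(dataset)

    def datasets_accessed_by(self, agent_id: str) -> set[str]:
        """Answer 'which datasets did this agent actually read?'"""
        return {
            r.dataset for r in self.audit_log
            if r.agent_id == agent_id and r.allowed
        }
```

With a gate like this in front of a data source, a question such as "which AI agents accessed which datasets?" becomes a query over `audit_log` rather than a forensic exercise. A production system would persist the log durably and support far richer policies than a static allow-list.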

Trust is currently in preview. It gives enterprises:

- Full visibility into real-time, AI model-to-data interactions

- Policy enforcement embedded directly into AI agent workflows

- Audit trails across streaming data, databases, and retrieval pipelines

- The ability to identify and investigate data quality anomalies

- Guardrails that evolve as enterprise AI adoption scales

The goal: allow enterprises to deploy agentic AI and innovate safely without losing operational integrity.

Why AI Governance Matters Now

AI is no longer just augmenting data science teams. It's embedded directly into the operational fabric of business:

- Sales agents powered by LLMs

- Customer support copilots

- AI-assisted supply chain systems

- Real-time financial and risk management agents

As one CISO recently said: "We've given AI the keys to the operational kingdom. But we have no visibility into what rooms it's actually accessing."

Without operational control, AI-native systems become opaque, fragile, and dangerously self-reinforcing. Conduktor Trust gives enterprises the visibility, control, and confidence to operationalize AI safely.

Faster AI Deployments with Built-In Governance

Trust enables engineers to execute live AI audits across operational pipelines, reducing downstream policy violations and their associated costs. By baking in governance from the start, teams speed up time-to-production for AI agent deployments. This improves confidence across data, security, and compliance teams.

We're not chasing the AI hype cycle. We're focused on helping real enterprises address real operational risks emerging right now.

If you're building your enterprise AI roadmap and facing these control challenges, get in touch.