How to Scale Kafka Across Teams Without Losing Control

Scale Kafka across teams with federated governance and self-service data platforms. Tackle access management, security, and onboarding bottlenecks.

Data streaming is now a critical business requirement. Interconnected systems and devices generate events continuously, and data volumes grow fast. Organizations that can't react to real-time data fall behind. According to the 2023 State of Streaming report, 76% of organizations exploiting streaming data see 2x to 5x returns.

Streaming adoption spreads quickly. One successful project sparks interest from other teams that want the same latency improvements. But as streaming spreads across teams and business units, growing pains emerge.

Access Management Breaks Down First

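Streaming data often includes personally identifiable information (PII) and payment card (PCI) data. Regulations require you to know how this data is used and by whom. You must also encrypt it at rest, but Apache Kafka doesn't support this out of the box: it can encrypt traffic in transit with TLS, while data written to broker disks stays unencrypted unless you add encryption at the disk or application level.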

Developers need tooling to troubleshoot and debug in production. Access and permissions must be managed securely for both applications and humans. Most organizations underestimate this complexity until they're already scaling.
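To make the access problem concrete, here is a minimal sketch of a deny-by-default permission check for both applications and humans. It is loosely modeled on the idea behind Kafka's prefixed ACLs, but it is not Kafka's authorizer: the `Grant` type, the `is_allowed` helper, the principals, and the `payments.` prefix are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Grant:
    """One permission entry (hypothetical model, not a Kafka API)."""
    principal: str         # e.g. "User:payments-service" or "User:alice"
    topic_prefix: str      # grant covers every topic starting with this prefix
    operations: frozenset  # e.g. frozenset({"READ", "WRITE"})


def is_allowed(grants, principal, topic, operation):
    """Deny by default; allow only if some grant matches all three fields."""
    return any(
        g.principal == principal
        and topic.startswith(g.topic_prefix)
        and operation in g.operations
        for g in grants
    )


# Illustrative data: a service that reads and writes, a human who only reads.
GRANTS = [
    Grant("User:payments-service", "payments.", frozenset({"READ", "WRITE"})),
    Grant("User:alice", "payments.", frozenset({"READ"})),
]
```

With these grants, the service can write to `payments.orders`, while the developer can read it for debugging but cannot write to it or touch topics owned by other teams. Granting by prefix rather than by individual topic is one way to keep the number of rules manageable as teams add topics.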

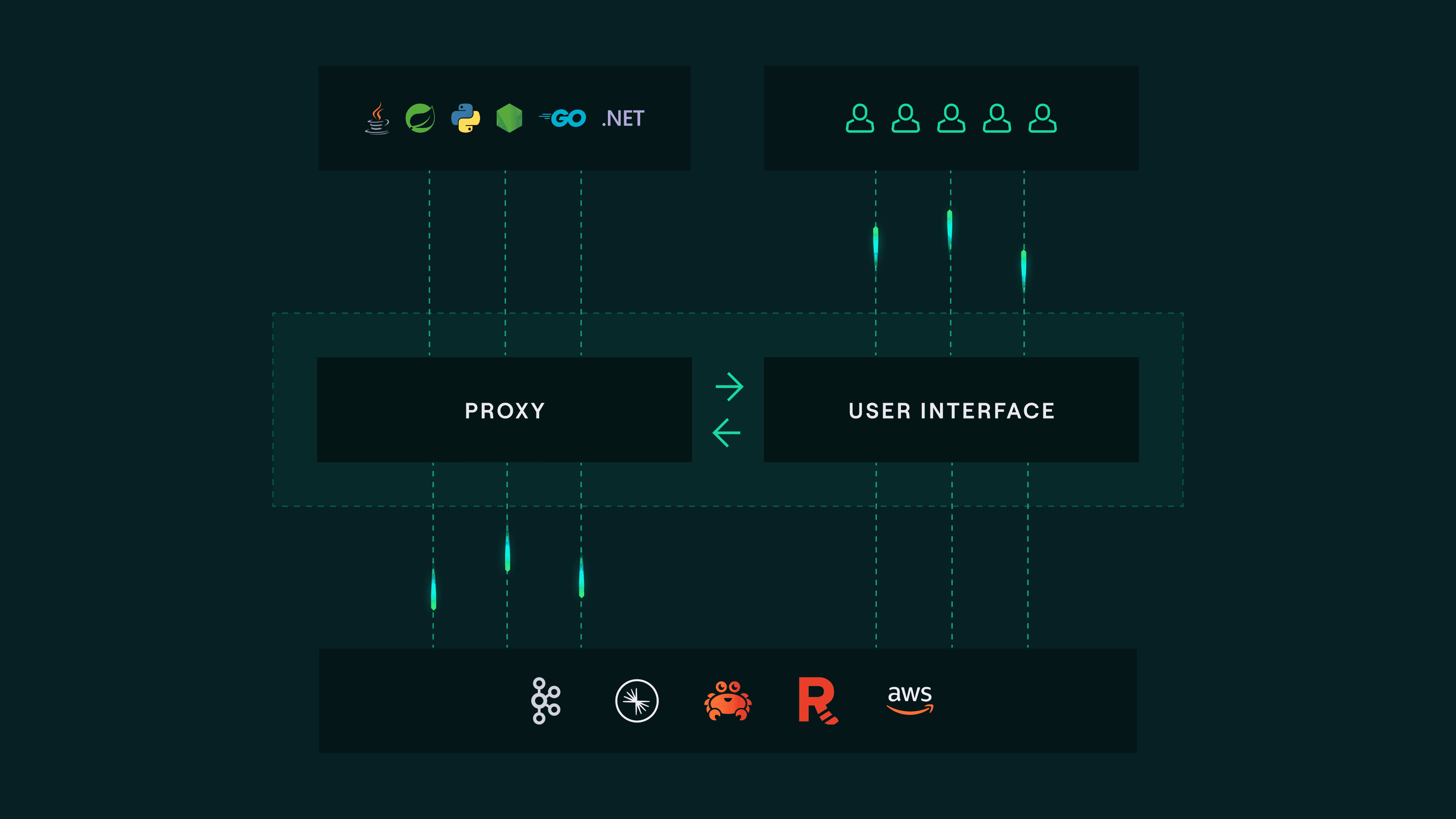

Platform Teams Become the Bottleneck

When data streaming becomes the backbone for data movement, it spawns many new projects. Each project needs new resources, and the requests to create them typically land on a central platform team.

This team quickly becomes a bottleneck. It can't keep up with developer demand and business timelines. The result: frustrated developers, delayed projects, and pressure to cut corners on security.

Real-Time Systems Require Real-Time Reliability

The goal of streaming is real-time decision-making. This means serving use cases on the critical path of a company's business. A production incident can lead to financial loss, reputational damage, or a severely degraded customer experience.

Streaming applications and pipelines need robust testing. Teams need proactive alerts when anomalies occur. Many Kafka deployments lack both.
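As one illustration of a proactive alert, the sketch below flags sustained consumer lag. It assumes you already collect, per partition, the log end offset and the consumer group's committed offset from your own monitoring. The function names, the threshold, and the "three consecutive samples" rule are assumptions made for this example, not a recommended standard.

```python
def total_lag(end_offsets, committed_offsets):
    """Sum per-partition lag: log end offset minus committed offset.

    Both arguments map partition number -> offset. A partition with no
    committed offset is treated as committed at 0 (an assumption of this
    sketch), and negative differences are floored at 0.
    """
    return sum(
        max(end - committed_offsets.get(partition, 0), 0)
        for partition, end in end_offsets.items()
    )


def should_alert(lag_samples, threshold, consecutive=3):
    """Alert only if the most recent `consecutive` samples all exceed threshold.

    Requiring several samples in a row avoids paging on a short burst that
    the consumer catches up on by itself.
    """
    if len(lag_samples) < consecutive:
        return False
    return all(lag > threshold for lag in lag_samples[-consecutive:])
```

For example, `total_lag({0: 100, 1: 250}, {0: 90, 1: 200})` adds a lag of 10 and a lag of 50, and `should_alert([10, 1200, 1500, 1800], threshold=1000)` fires because the last three samples all exceed the threshold.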

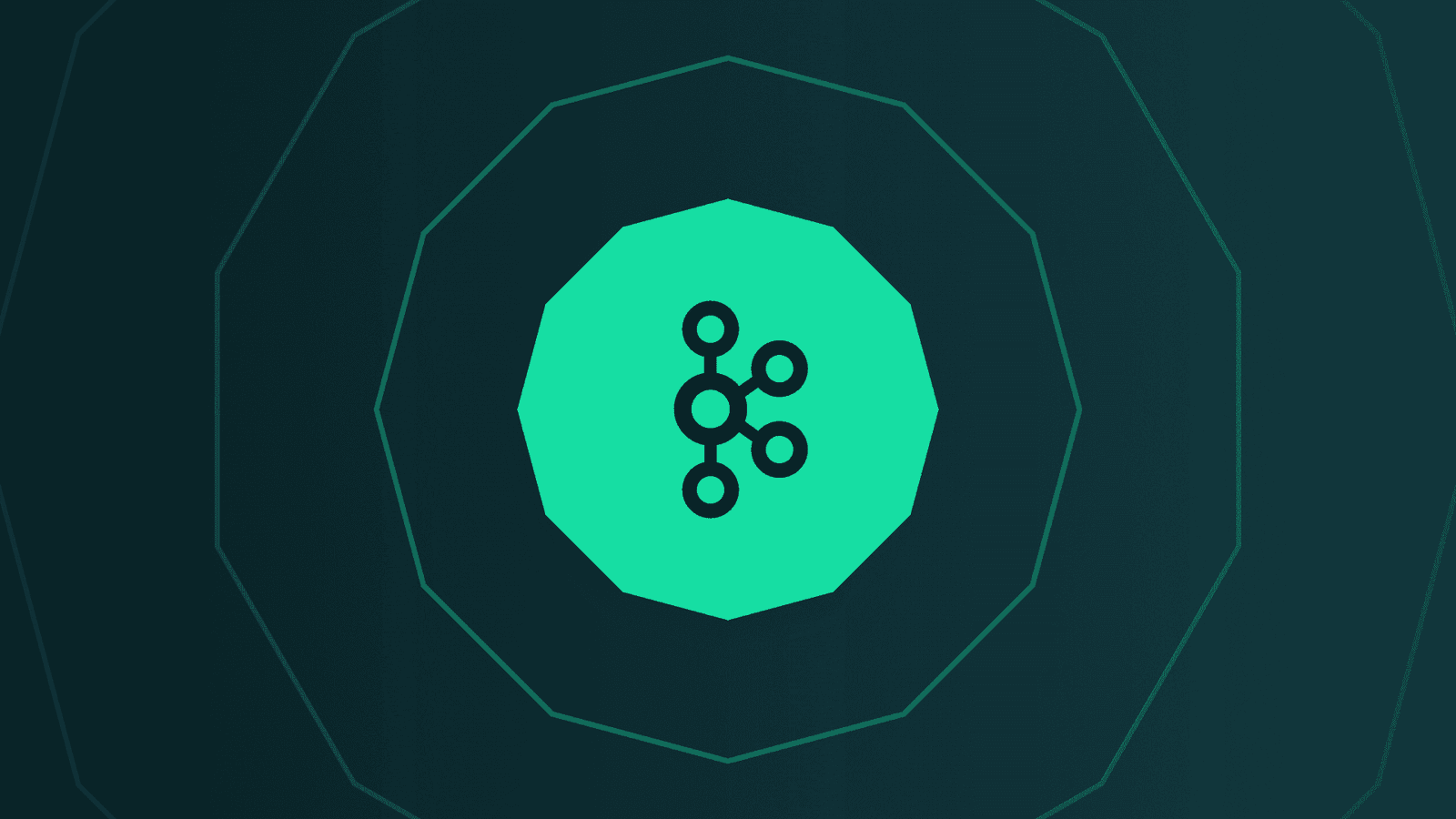

The Solution: Developer Autonomy with Organizational Control

Based on Conduktor's experience helping over 15,000 businesses scale data streaming, the answer is to combine autonomy with guardrails.

A self-serve data platform drives efficient processes. Federated governance and security controls allow organizations to enforce overarching rules. Developers have the freedom to innovate quickly, create, and collaborate. Platform teams know that safeguards are in place to mitigate risk.
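A rough sketch of what "autonomy with guardrails" can look like in practice: a developer submits a topic request, and organization-wide rules are checked automatically instead of by a ticket to the platform team. Everything here is hypothetical, including the `TopicRequest` fields, the naming convention, and the limits. It does not represent Conduktor's product or any Kafka API.

```python
import re
from dataclasses import dataclass


@dataclass
class TopicRequest:
    """A self-service request for a new topic (hypothetical shape)."""
    name: str
    partitions: int
    retention_ms: int
    owner_team: str


# Hypothetical organization-wide rules set by the platform team.
NAME_PATTERN = re.compile(r"^[a-z][a-z0-9-]*\.[a-z0-9.-]+$")  # "<team>.<topic>"
MAX_PARTITIONS = 24
MAX_RETENTION_MS = 7 * 24 * 60 * 60 * 1000  # 7 days


def validate(req):
    """Return a list of rule violations; an empty list means the request passes."""
    errors = []
    if not NAME_PATTERN.match(req.name):
        errors.append("name must look like '<team>.<topic>' in lowercase")
    elif not req.name.startswith(req.owner_team + "."):
        errors.append("name must start with the owning team's prefix")
    if not 1 <= req.partitions <= MAX_PARTITIONS:
        errors.append(f"partitions must be between 1 and {MAX_PARTITIONS}")
    if not 0 < req.retention_ms <= MAX_RETENTION_MS:
        errors.append("retention_ms must be positive and at most 7 days")
    return errors
```

A request such as `TopicRequest("payments.orders", 6, 86_400_000, "payments")` passes with no errors, so it could be provisioned without waiting on the platform team. A request for 100 partitions, or for a topic under another team's prefix, is rejected with a message the developer can act on immediately.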

About Conduktor

Conduktor transforms how businesses interact with real-time data. Our software, built for organizations using Apache Kafka as data infrastructure, enhances security, improves data governance, and simplifies complex architectures.

We equip platform teams with tools that offer flexibility in enforcing organizational standards while enabling developer self-service.

Contact us to discuss your use cases. We are Kafka experts building out-of-the-box solutions for enterprises, and we want your feedback.